5

Hypermedia

"Being multimedia is not quite enough for a program to be hypermedia."

[Nielsen, 1989, p. 5]

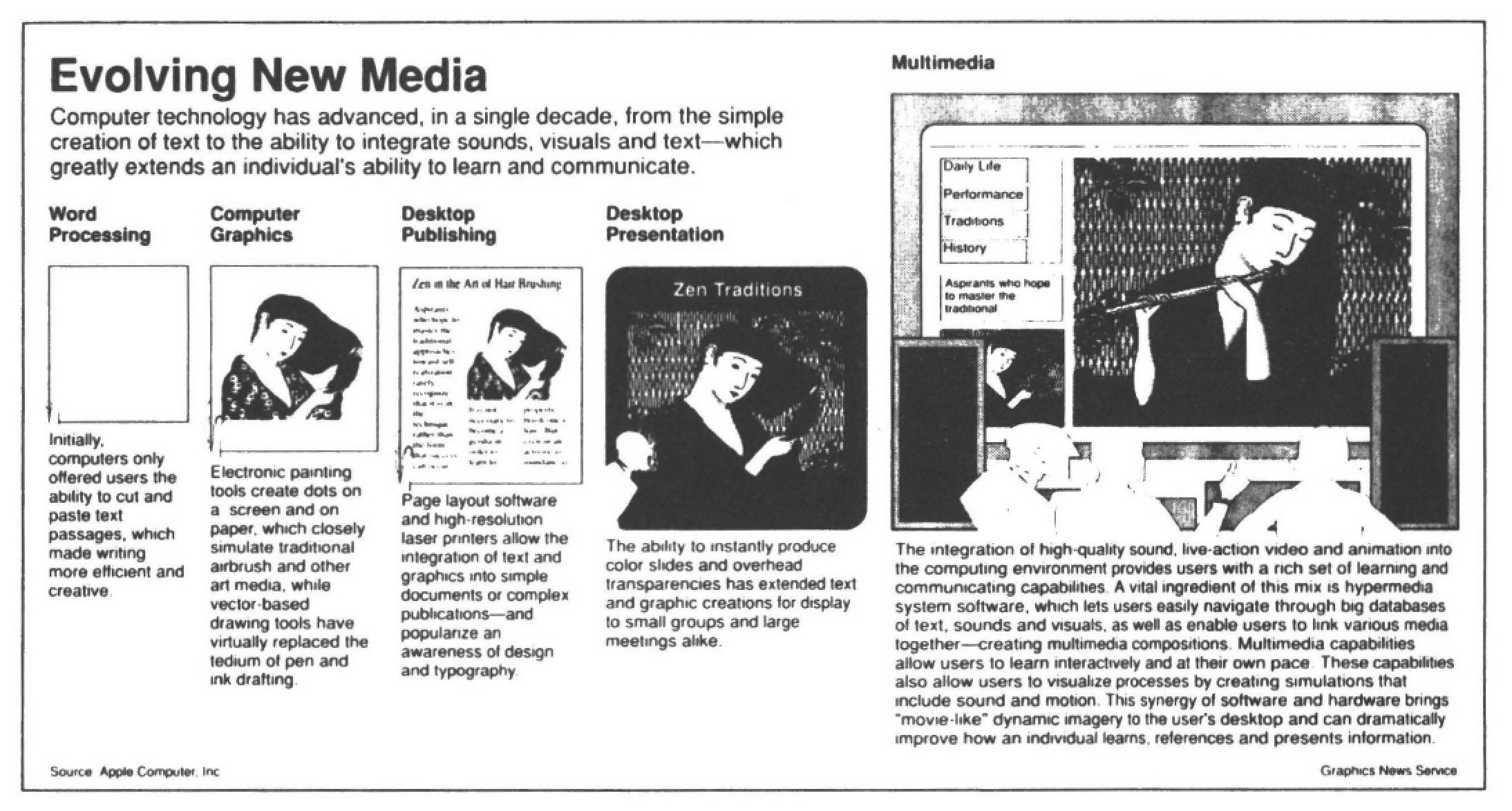

The word "multimedia" is one of the most overhyped in today's computer vernacular. Any application that manages to create even the most rudimentary mixture of text, pictures, or sound is likely to be dubbed by its marketers as a multimedia application.

The term "hypermedia" suffers from the same affliction. Most of the popular hypertext authoring systems on the marketplace today claim the ability to add still and moving graphics, audio, and software to the mix. Books have always had pictures in them – are they hypermedia applications too?

A computer program that runs in color with a few pictures and beeps is not necessarily a multimedia application; a multimedia application is not necessarily a hypermedia application.

In this chapter, we reserve the term hypermedia for those applications that allow users to forge their own nonlinear paths through images, sounds, and text.

A Note About Terminology Used In This Chapter

One of the problems we all confront when writing about hypertext is its unformed lexicon. Fortunately, the term "authors" works for the creators of both hypertext and hypermedia applications. But what do you call the person who browses these products? "Reader" works well for one who uses a hyperdocument, but does not seem to apply to someone blazing a trail through a multimedia extravaganza that includes moving pictures and sound.

In this chapter, we will use the term "viewer" for a person "reading" a hypermedia document. Unfortunately, this term connotes passive couch potatoes who watch what goes by without influencing it. We attach no such limitations to a hypermedia viewer. Like hypertext readers, hypermedia viewers must be given the freedom to follow their own noses through the hypermedia web. Another problem is that the term viewer does not work well when discussing interaction with a sound passage. Again, lacking a better term, we must settle on "viewer."

Hazards and Rewards Of Hypermedia

"The idea of branching movies is quite exciting. The possibility of it is another thing entirely."

[Nelson, 1975, p. DM44]

Adding pictures and sound to a hypertext can make the application much more expressive. Browsing a hypermedia application is exciting because it’s the opposite of the passive act of watching a video or TV show. However, hypermedia applications can also overwhelm and confuse viewers. The larger the number of different media you use, the more careful you must be to place them in a clearly defined context and structure so as not to startle viewers.

Another problem is that the complexity and expense of authoring hypermedia applications increase exponentially with the number of devices used. Throwing a graph or two into what was formerly a strictly textual hypertext is technically easy to achieve and easy for viewers to follow. But adding a nonlinear sound track and full-motion video pushes the development costs per second up to figures approaching those required to produce a television commercial.

In order for a hypermedia application to succeed, the images and sounds must be so smoothly integrated into its fabric that viewers don’t have to stop to worry about whether any particular node consists of text, graphics, motion video, audio, or calls to a database report or spreadsheet. Authors, on the other hand, need to be fully cognizant of the complexity to be mastered when producing a hypermedia application. Hypermedia authors need to understand the added development and production cost of sound and video hypermedia before they tackle either medium. They must also understand the costs in terms of disk real estate and video and audio hardware that those in the target audience must purchase in order to view hypermedia applications.

In this chapter we will describe the advantages, costs and pitfalls that ensue when sound, stills, and moving pictures are added to hyperdocuments. The chapter is divided, roughly, into two parts. In the first part, we investigate the multimedia components of hypermedia.

Use of these diverse technologies can make a hypermedia application great, or drag its developer to the depths of despair. In the second part of this chapter, we discuss the issues hypermedia developers face in designing and implementing hypermedia applications.

The Media of Hypermedia

Five major categories of media can be incorporated into a hypermedia application – text, still pictures, moving pictures, sound, and other computer programs. Each medium offers its own advantages and poses unique problems.

The joys and sorrows of converting text into hypertext are discussed extensively elsewhere in this book. In Chapter 22 by Littleford, techniques for integrating AI and non-AI-based programs into hyperdocuments are suggested. Therefore, in this chapter, we will concern ourselves with the practicalities of using still pictures, moving pictures, and sound in hypertextual applications.

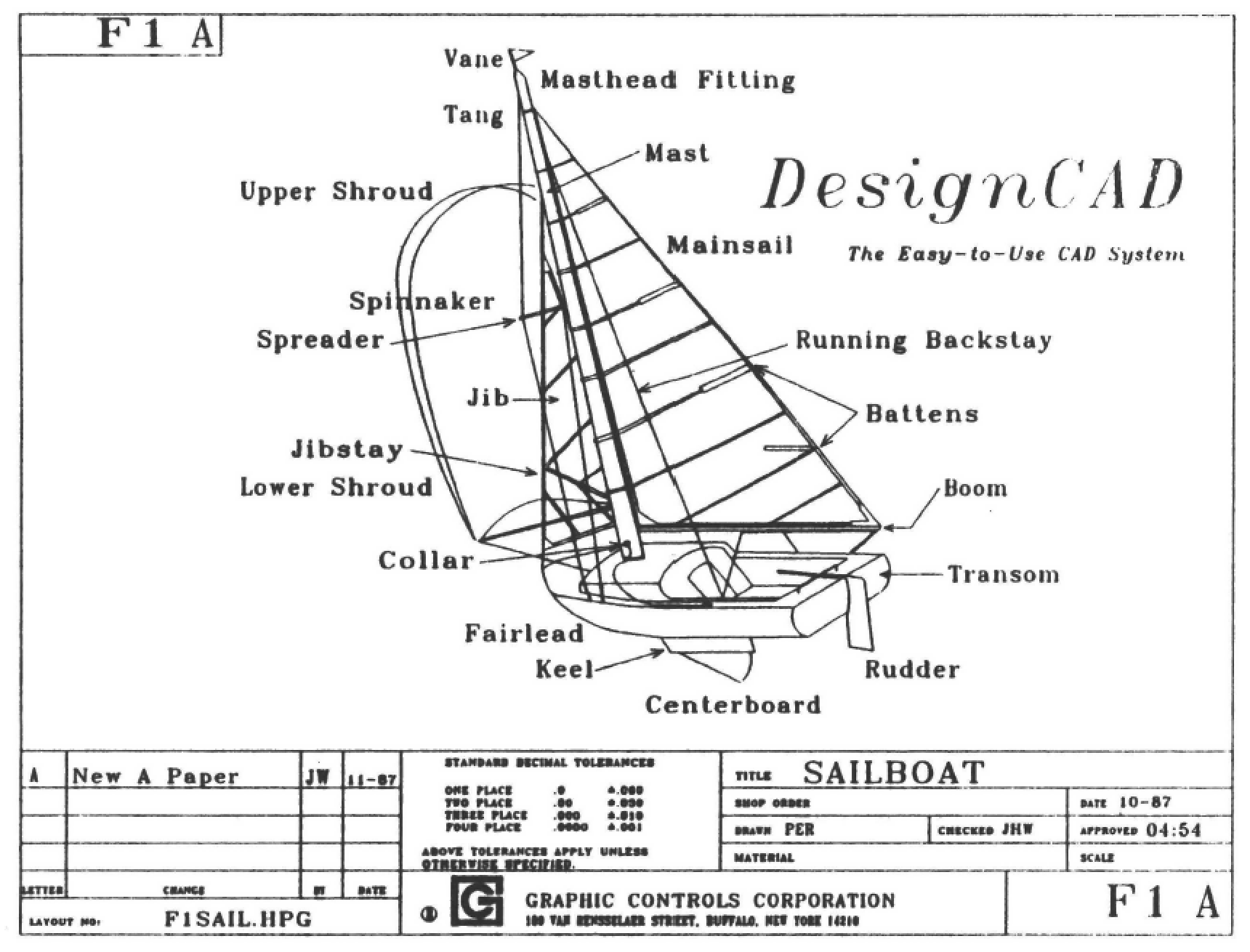

Computer-Generated and Computer-Assisted Graphics

Still Graphics

"Pictures are indeed an information mystery. They may be worth a thousand words, they may not be. But, incredibly, they require a thousand times as many bits. So perhaps they had better be a thousand times more valuable!"

[Lucky, 1989, p. 29]

Adding still pictures to a hypertext is the easiest way to make the leap from hypertext to hypermedia, but that does not mean the transition is easy. One problem is the sheer number of graphics formats currently in vogue. For example, major graphics standards currently popular on IBM personal computers include PostScript (EPS), Lotus 1-2-3 (PIC), Publisher’s Paintbrush (PCX), SYLK, TIFF, Windows Metafile (WMF), Windows Paint (MSP), Graphwriter II (CHT and DRW), graphic metafile (CGM and GMF), GKS (Graphical Kernel System), DXF, and IGES.

There is not room in this chapter to discuss the relative strengths and weaknesses of each graphics format. Suffice it to say that each hypertext development and delivery platform obeys a different set of graphics conventions and imposes its own limitations on graphics quality and number of colors.

Fortunately, many hypermedia authoring systems come complete with built-in graphics creation and editing tools. These built-in tools may save the poor developer from having to worry about the format and source of pictures, but they also limit the range of images developers can use. In the end, most developers take the plunge and master the art of bringing illustrations in from outside sources, including business graphics programs, paint and draw programs, CAD programs, or scanners.

Animated Graphics

Animation provides an interim step between still images and full-motion video. Computer-generated animations are considerably cheaper to produce than full-motion video. In many cases, animations can be created by a single artist using a microcomputer. Shooting live video usually requires the services of both a film and sound crew as well as a dedicated studio to edit the video and to convert it to a format that can be received by the computer.

The costs of creating an animated sequence vary with the complexity of the images provided and the number of frames (screens) displayed per second. Sometimes, a very simple animated sequence updated only a couple of times per second can be very effective. For example, creating a simple stream of images depicting the unwinding of a DNA molecule is easy and provides visual information no still photograph or motion video could rival.

Video

"…[W]e have to devote about 100 million bits per second to create a typical television picture on your TV screen. But then you sit there in front of that bitwise voracious display and ingest only your meager few dozen bits per second."

[Lucky, 1989, p. 30]

Full-motion video is nothing more than a series of still pictures flashing across a screen at 30 frames per second. Sounds simple enough, but that is an awful lot of information for a computer to process.

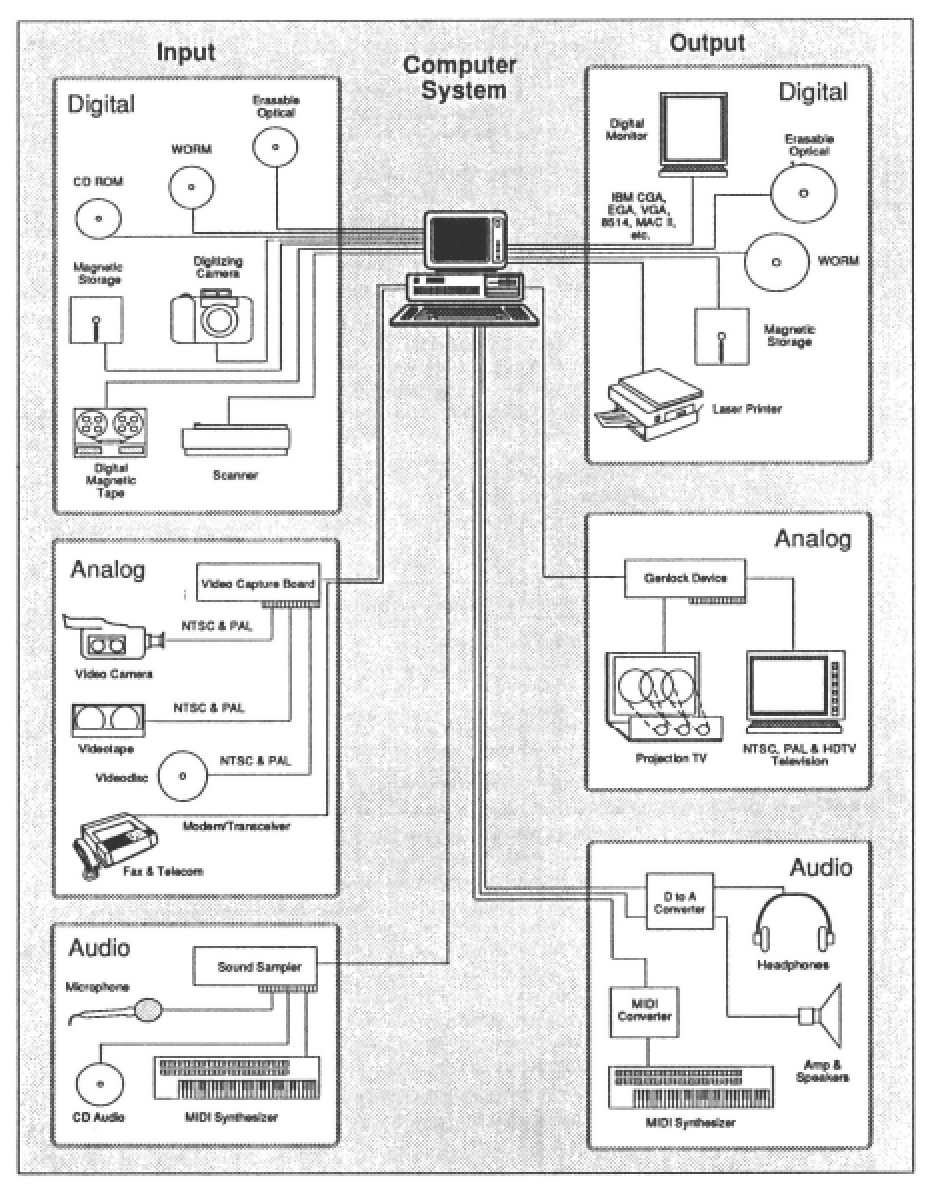

The problem is complicated because video can be brought into the computer in either of two entirely incompatible formats (analog or digital). Nor is it usually possible to store analog and digital video on the same storage device or to bring them into the computer over the same cabling scheme.

Analog video, like the video displayed on a television set, is made up of continuous lines of colors (or gray) that are displayed and then vanish one after the other as they are replaced with new lines. Digital video is composed of discrete dots which are displayed, a screenful at a time, on a computer (digital) monitor.

Delivering Analog Video

It is not usually possible to store analog video on digital devices such as magnetic hard drives. Instead, analog video can be input to a hypermedia system using devices such as VCRs, videotape players, video cameras, and video still cameras. However, these devices are not able to access video frames out of sequence. In order to get to frame 100 on a videotape, a VCR must play all 99 earlier frames in order. Thus, we say that these analog devices are not random access devices.

One of the few analog media that computers can access randomly is the videodisk. Therefore, analog video sequences to be integrated into a hyperdocument are almost always stored on videodisk.

Videodisk is an analog form of Read Only Memory (ROM). Once a videodisk has been created (pressed) at a mastering facility, like a phonograph record, it cannot be altered. A new kind of analog optical disk memory, called OMDR, is now available from Panasonic, Sony and Teac. OMDR is a WORM (Write Once, Read Many times) format; each frame on an OMDR disk can be written once at the user site. Once a frame has been written on OMDR, it cannot be altered or deleted; however, writing to one frame of OMDR does not preclude writing to other frames later.

Videodisk formats vary, but usually each track on a disk stores one frame of video information. Since television quality video usually plays at 30 frames per second, the 54,000 tracks that can be stored on each side of a videodisk yield about 30 minutes of television-quality video. It takes a videodisk player between one and three seconds, depending on the player, to find the first frame in a sequence. After that, the videodisk can display the frames in the sequence at the rate of one every thirtieth of a second.
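
The playing-time figure above follows directly from the track count and the frame rate. A minimal sketch of that arithmetic, assuming one frame per track as the text describes (the function name is illustrative only):

```python
# Figures taken from the text: one video frame per track, 54,000 tracks
# per side, and television-quality playback at 30 frames per second.
TRACKS_PER_SIDE = 54_000
FRAMES_PER_SECOND = 30


def playing_time_minutes(tracks: int, fps: int) -> float:
    """Return continuous playing time in minutes, assuming one frame per track."""
    seconds = tracks / fps
    return seconds / 60


print(playing_time_minutes(TRACKS_PER_SIDE, FRAMES_PER_SECOND))  # 30.0
```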

Digital Video

Digital computers can turn analog devices on and off, or create windows in which to display an entire analog image, but the amount of processing a computer can perform on an analog image is severely limited. It is relatively easy for a computer to adjust colors in digital images, to crop and size them, or to mix several digital images (any combination of text, video or image) together.

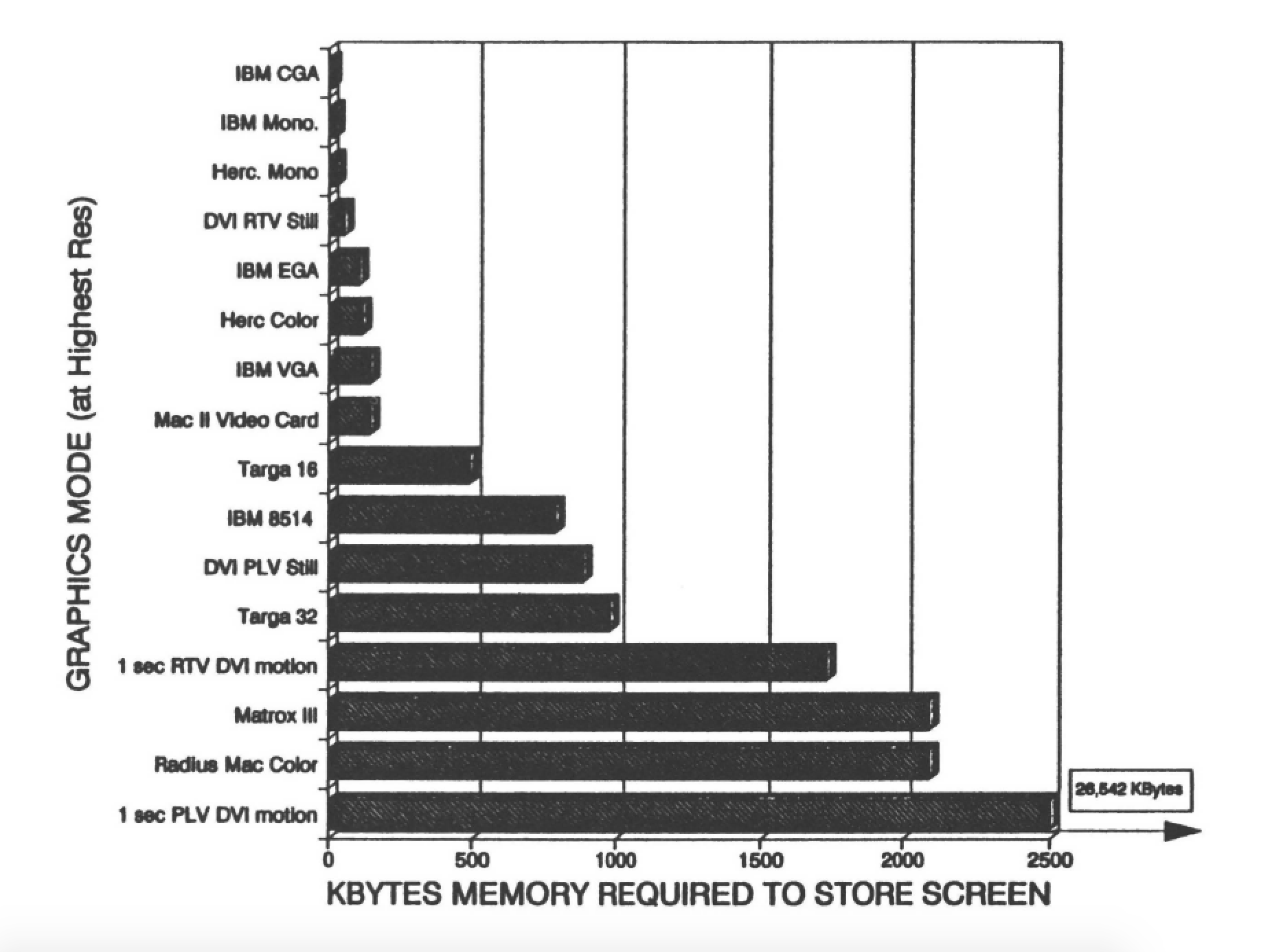

Digital video frames are made up of discrete dots, called pixels, each of which is a combination of three colors: red, green and blue. The number of colors that can be displayed on a digital monitor is determined by the color depth – the number of bits used to define the red, green, and blue characteristics of each pixel. For example, the color depth of a Hercules-compatible (black-and-white) screen is 1.

Only one bit of information is needed to display a pixel on a black-and-white monitor, with 1 meaning the pixel is white and 0 meaning the pixel is black. A monitor that can display 16.7 million colors needs at least 24 bits per pixel. There is a whole host of devices capable of capturing and storing digital images. Prominent among these devices are digitizing cameras, digital scanners, and modems. Digitizing boards can also be used to translate analog images into a digital format (they freeze the continuous, analog lines of color and then translate them into discrete pixels).
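
The relationship between color depth and the number of displayable colors is a power of two. The sketch below checks the two depths mentioned above (1 bit and 24 bits); the 8-bit case is added here purely as an illustrative intermediate value and does not come from the text.

```python
def displayable_colors(bits_per_pixel: int) -> int:
    """Number of distinct values that bits_per_pixel bits can encode."""
    return 2 ** bits_per_pixel


# 1 and 24 are the depths discussed in the text; 8 is an illustrative extra.
for depth in (1, 8, 24):
    print(depth, displayable_colors(depth))
# 1 2
# 8 256
# 24 16777216
```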

Delivering Digital Video

Digital images may be relatively easy to process, but they do chew up an awful lot of storage space. Say, for example, that a single digital video frame consists of an array 512 pixels across by 480 pixels down. If each pixel required 8 bits for each red, green, and blue component, the video would take up about 3/4 million bytes per screen. Multiply this number by the 30 frames per second required to generate a television quality moving image and the storage requirement is mind-boggling.

A 60-second commercial shown in this resolution would take up more than 1000 million bytes of hard disk space. The voluminous quantities of data required by multimedia and hypermedia applications have spurred the popularity of high-capacity optical data stores such as CD-ROMs. CD-ROM is a read-only, digital, computer-readable form of the popular CD-Audio medium. One CD-ROM can hold 540 Mbytes of digital data. [Miller, 1986]
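
The storage figures quoted above can be reproduced with a few multiplications. This is a minimal sketch using only the numbers given in the text (a 512-by-480 frame, 8 bits for each of the red, green, and blue components, 30 frames per second); the function names are illustrative.

```python
WIDTH, HEIGHT = 512, 480      # pixels per frame, from the text
BYTES_PER_PIXEL = 3           # 8 bits each for red, green, and blue
FRAMES_PER_SECOND = 30


def frame_bytes(width: int, height: int, bytes_per_pixel: int) -> int:
    """Uncompressed size of one frame in bytes."""
    return width * height * bytes_per_pixel


def clip_bytes(seconds: int, fps: int, bytes_per_frame: int) -> int:
    """Uncompressed size of a clip of the given length in bytes."""
    return seconds * fps * bytes_per_frame


one_frame = frame_bytes(WIDTH, HEIGHT, BYTES_PER_PIXEL)
commercial = clip_bytes(60, FRAMES_PER_SECOND, one_frame)

print(one_frame)    # 737280      (about 3/4 million bytes)
print(commercial)   # 1327104000  (more than 1000 million bytes)
```

At these rates, the uncompressed 60-second commercial alone would exceed the 540 Mbytes a single CD-ROM can hold.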

The data that can be jumbled onto a single CD-ROM may include digitized video, audio, bitmaps, and other computer graphics, text and computer programs. Unfortunately, the data transfer rate of a CD-ROM drive is only 150K bytes per second (a fraction of the speed of the average magnetic hard drive). This slow data transfer rate is the cause of the noticeable delay one must expect when calling data up from a CD-ROM.

The data transfer rate also makes displaying full-motion video stored on CD-ROM difficult. We discuss some of the fancy footwork required to retrieve the 30 frames per second required for full-motion video later in this chapter. Other random storage devices that can hold digital images include magnetic disks, WORM, and read/write optical disk.

Mixing Analog and Digital Video on the Same Screen

In general, analog video images can only be output to analog monitors, while digital video is output to digital monitors. Analog monitors are commonplace; they include the NTSC televisions in the United States and the PAL televisions in Europe.

In order to combine computer graphics or computer-generated text on the same screen as analog video, a computer must be equipped with a gen-locking device. Gen-locking devices synchronize pulsed analog signals and discrete digital signals so that they can appear on screen at the proper place and time. Gen-locking capabilities can be added to computers by installing an overlay board, which also pre-combines the digital (text or image) and the analog picture for display on the same screen.

Sound

Analog and Digitized Sound

"Although the idea of hypertext that includes sound is greatly appealing, the actual implementation, and ongoing maintenance, of such complicated formats is very costly."

[Lucky, 1989, p. 210]

"There is a ratio of 1,000 to more than 10,000 in the information rate required to communicate language in the form of speech as compared to text. The number of bits on a compact disk for an hour of speech or popular music would suffice for the text of 3 Encyclopedia Britannicas."

[Lucky, 1989, p. 240]

Like video, audio comes in both analog and digital flavors. Record players and tape cassettes store audio in an analog format. CD-audio (compact disks) and DAT (digital audio tape) store music in a digital format. A standard CD-ROM or CD audio disk can accommodate up to about 72 minutes of audio.

Sound itself is transmitted through the air as an analog signal (sound waves). Microphones take sound in as an analog signal; speakers send it out again as an analog signal.

The reasons for digitizing sound are similar to those for digitizing graphics and video. Digitized sound can be processed by computers in ways that are just not possible when the sound remains in an analog format. Digitized sound takes up almost as much space as digitized video, and, like video, can be compressed to take up less space on the storage medium. The device used to translate analog sounds into a digital format is called a sound digitizer (or sampler).

Synthesized Sound

Instead of digitizing real sounds, the hypermedia author can generate sound on the fly using specialized chips. Speech can be reproduced from either an ASCII text representation or a list of the phonemes. Current inexpensive technology permits a reasonable representation of a human voice, albeit usually one that has a pronounced accent.

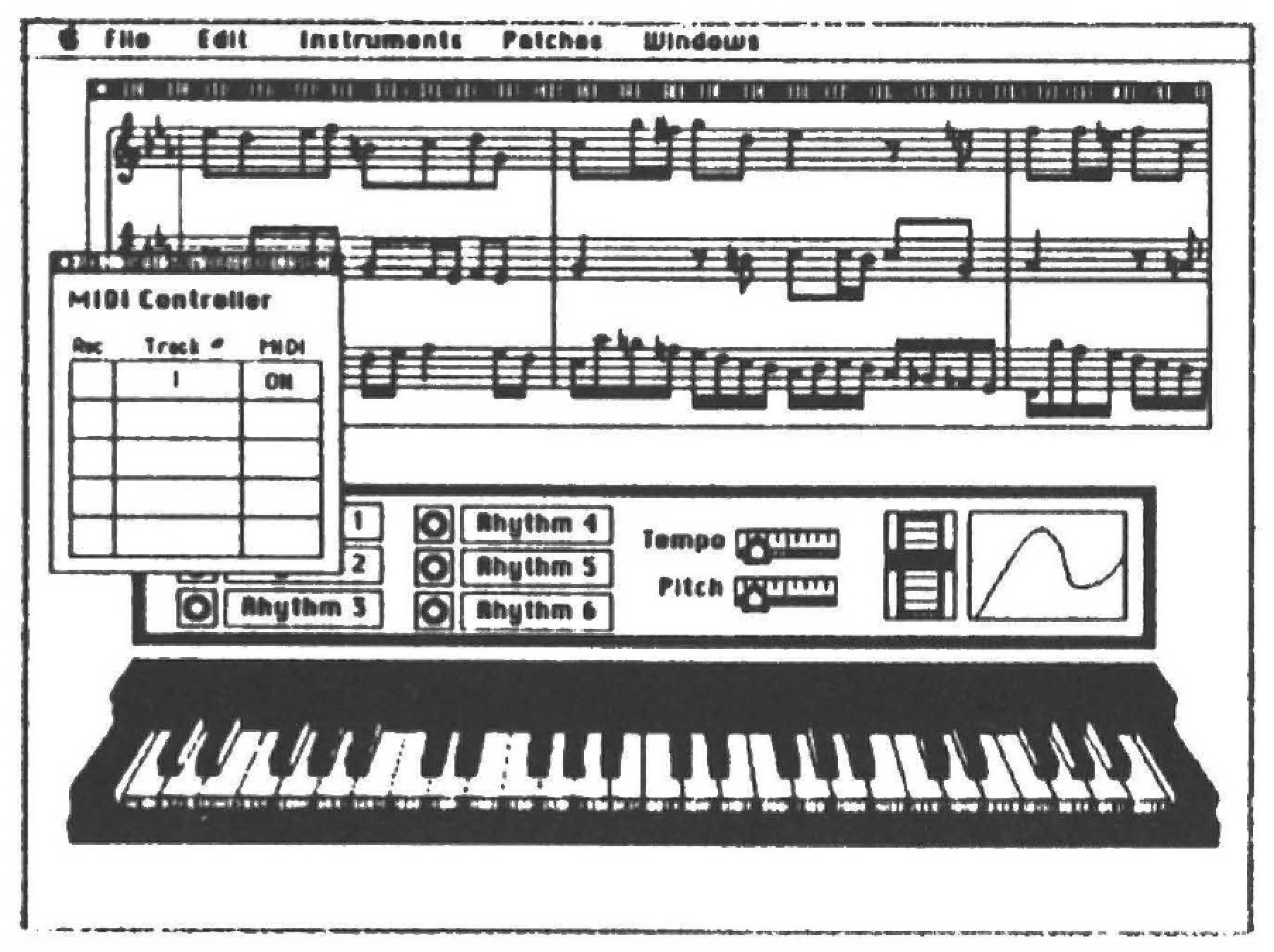

Musical synthesizers create digital music and other sounds.

One advantage that sound has over still pictures and video is that a single standard, MIDI, has been adopted worldwide for the dissemination and processing of sound. The MIDI format allows sounds to be generated on a synthesizer and stored and processed on a computer. MIDI can drive a musical synthesizer which reproduces the music with the desired tone and timbre. A MIDI-compatible sound-generating peripheral is usually required to create the sounds so specified; these devices are becoming widely available both as output and input devices.

Techniques That Make Multimedia Possible

Compression

"Mere compression, of course, is not enough; one needs not only to make and store a record but also to be able to consult it …. Compression is important, however, when it comes to costs."

[Bush, 1945]

Video Compression

As we have seen, storing enough information to create a 30 frame-per-second (fps) video can take a tremendous amount of disk storage. Transferring all that data from CD-ROM to screen RAM fast enough to generate 30 frames-per-second requires a staggeringly fast data transfer rate of 22 Mbytes per second. (It would take an average CD-ROM player over an hour to play back 30 seconds of video stored on its shiny surface). One solution to the storage and data transfer problem is to compress video images so that each frame takes up less space on the CD-ROM and so there’s less data to be moved.
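
The transfer-rate claims above can be checked in the same way. This sketch assumes the uncompressed 512-by-480, 24-bit frame used earlier in the chapter, and it interprets the CD-ROM rate of "150K bytes per second" as 150 × 1024 bytes; both the interpretation and the function names are assumptions made for illustration.

```python
BYTES_PER_FRAME = 512 * 480 * 3         # uncompressed frame from the chapter
FRAMES_PER_SECOND = 30
CDROM_BYTES_PER_SECOND = 150 * 1024     # assumption: "150K" means 150 * 1024


def required_rate(bytes_per_frame: int, fps: int) -> int:
    """Bytes per second needed to sustain uncompressed full-motion video."""
    return bytes_per_frame * fps


def transfer_seconds(total_bytes: int, bytes_per_second: int) -> float:
    """Time needed to move total_bytes over a channel of the given speed."""
    return total_bytes / bytes_per_second


rate = required_rate(BYTES_PER_FRAME, FRAMES_PER_SECOND)
thirty_second_clip = rate * 30

print(rate)                                                           # 22118400
print(transfer_seconds(thirty_second_clip, CDROM_BYTES_PER_SECOND) / 60)  # 72.0
```

Under these assumptions, 30 seconds of uncompressed video takes 72 minutes to read, consistent with the "over an hour" figure.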

Most decompression schemes work on the fly, after data has been transferred from the digital store (CD-ROM or magnetic hard drive) to the computer screen. But even at a compression rate of 80%, a five-minute-long sequence that will run at 30 frames per second can take a good long time to transfer and display [Berk, 1990]. Thus, although the capacity of a CD-ROM is 500 times that of a floppy disk, hypermedia applications devour both CD-ROM real estate and CPU and graphics processing power at an incredible rate. In hypermedia, no matter how much performance and space we get, we need more. Lossless compression techniques, which guarantee that no information in the original is lost during the compression/decompression process, can only achieve a 2:1 or 3:1 compression ratio when applied to video information.

Higher ratios of compression are attained by sacrificing some image quality. Details which are only slightly discernible by the human eye can be eliminated without weakening the impact of the picture. The required data transfer rate can also be lowered by decreasing the resolution of the picture, slowing the rate at which frames are changed, or some combination of both.

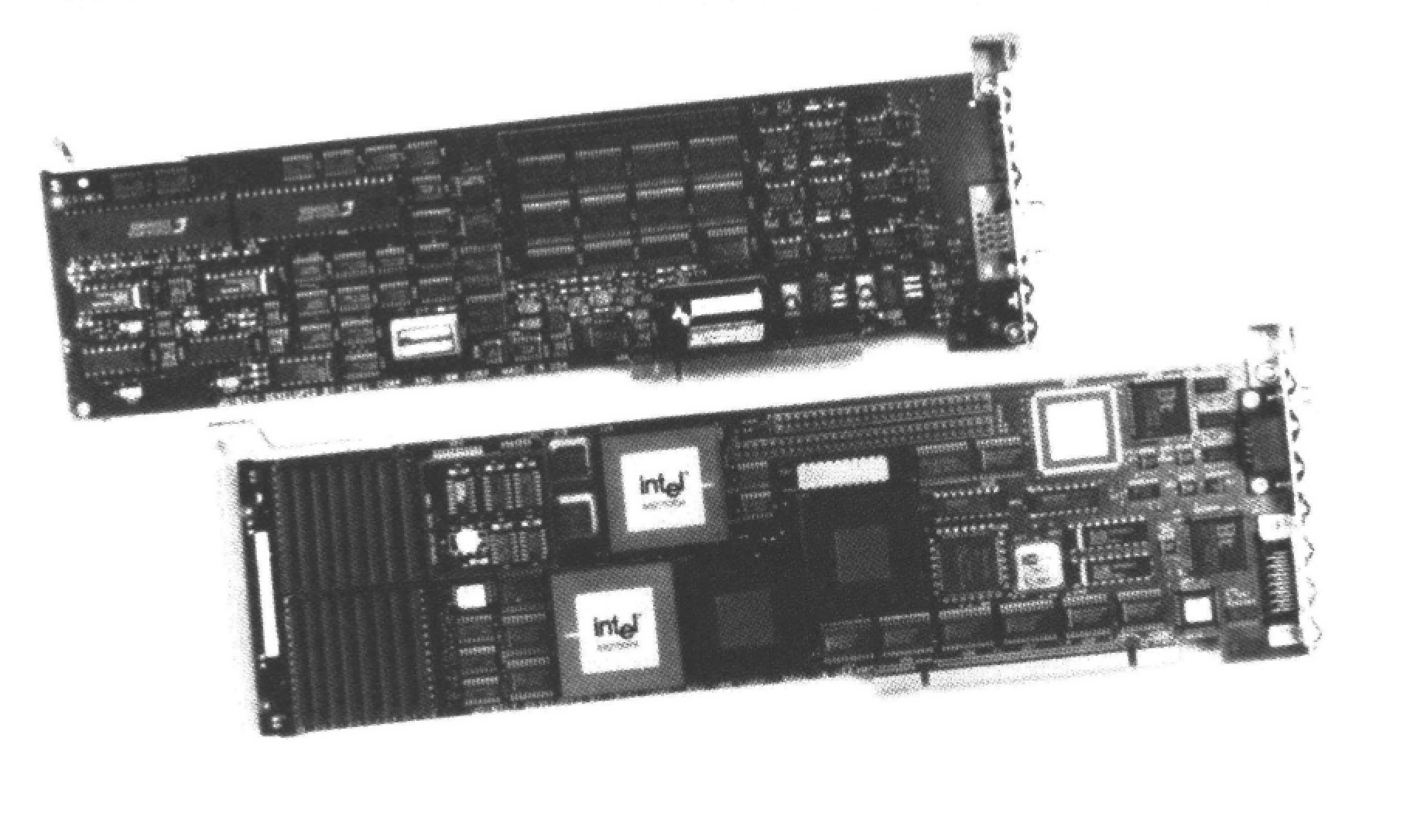

There are several commercial systems that compress video data. All barter image quality for space. They include Intel’s Digital Video Interactive (DVI) and Philips’ Compact Disc Interactive (CD-I). DVI requires extensive preprocessing of the video signal and currently yields 30 frame-per-second video sequences at a relatively low image quality. CD-I currently does not support full-screen 30 fps video, but promises image quality of decompressed CD-I sequences that is somewhat higher than those running through DVI decompression chips.

Almost all compression schemes used today are proprietary, and thus incompatible with each other, but most take similar approaches to increasing compression ratios. Images are normally recorded in RGB (Red Green Blue) coding, where each pixel is defined as a combination of those three colors. Compression algorithms usually convert the RGB codes to YUV. (This conversion can be accomplished using a relatively straightforward mathematical equation.) The Y value contains the luminance (brightness) and the U and V values contain the chrominance (color) values. Because the human eye is more sensitive to differences in brightness than color, the U and V values can be compressed to a greater extent than the Y values, yielding about a 3:1 compression. Cutting the resolution in both directions by 2 achieves another 4:1 decrease in size.
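
As an illustration of the conversion mentioned above, the sketch below uses one widely used definition of YUV (the luminance weights 0.299, 0.587, and 0.114, with U and V as scaled color differences). The chapter does not say which variant any particular product uses, so these coefficients are an assumption, as are the function names. The second function shows why halving the resolution in both directions gives a 4:1 reduction.

```python
def rgb_to_yuv(r: float, g: float, b: float) -> tuple[float, float, float]:
    """Convert one RGB pixel to YUV using one common set of coefficients
    (an assumption; proprietary schemes may use other variants)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b   # luminance (brightness)
    u = 0.492 * (b - y)                     # chrominance: blue difference
    v = 0.877 * (r - y)                     # chrominance: red difference
    return y, u, v


def halve_resolution(pixels: list[list]) -> list[list]:
    """Keep every second pixel of every second row (crude subsampling)."""
    return [row[::2] for row in pixels[::2]]


# A pure gray pixel carries brightness but essentially no color information.
print(rgb_to_yuv(128, 128, 128))

# A 4-by-4 image shrinks to 2-by-2: 16 values become 4, a 4:1 reduction.
image = [[0] * 4 for _ in range(4)]
small = halve_resolution(image)
print(len(image) * len(image[0]), len(small) * len(small[0]))  # 16 4
```

Separating brightness from color in this way is what lets a scheme store U and V more coarsely than Y.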

Interframe correlation compression techniques can also be applied. That is, the compression algorithm determines how each frame in a sequence differs from the one before it, and only stores the information that has changed between frames. These types of base/delta compression techniques can achieve up to 10:1 or 15:1 compression. Choosing to display smaller frames or to run sequences at slower frame rates can decrease the data space requirements even further.
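
The base/delta idea can be sketched in a few lines. The version below is a deliberately simplified, lossless toy: frames are flat lists of pixel values, and each delta is a dictionary of the positions that changed. Real schemes are proprietary and considerably more elaborate, so the representation and function names here are assumptions for illustration only.

```python
def encode(frames: list[list[int]]) -> tuple[list[int], list[dict[int, int]]]:
    """Store the first frame in full (the base) and, for every later frame,
    only the pixels that differ from the frame before it (the delta)."""
    base = list(frames[0])
    deltas = []
    previous = base
    for frame in frames[1:]:
        delta = {i: new for i, (old, new) in enumerate(zip(previous, frame))
                 if old != new}
        deltas.append(delta)
        previous = frame
    return base, deltas


def decode(base: list[int], deltas: list[dict[int, int]]) -> list[list[int]]:
    """Rebuild every frame by applying the deltas to the base, in order."""
    frames = [list(base)]
    for delta in deltas:
        frame = list(frames[-1])
        for position, value in delta.items():
            frame[position] = value
        frames.append(frame)
    return frames


clip = [
    [0, 0, 0, 0, 0, 0, 0, 0],
    [0, 0, 9, 0, 0, 0, 0, 0],   # one pixel changes
    [0, 0, 9, 9, 0, 0, 0, 0],   # one more pixel changes
]
base, deltas = encode(clip)
print(deltas)                        # [{2: 9}, {3: 9}]
print(decode(base, deltas) == clip)  # True
```

Note that a frame can only be rebuilt by replaying every delta since the last base frame, which is the price paid for the smaller size.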

A new class of compression chips based on an international standard called Joint Photographic Experts Group (JPEG) has recently begun to hit the market. A related international standard video compression scheme called Moving Picture Experts Group (MPEG) is also in the works. The first MPEG compression chips are expected to be sampled sometime in mid-1991 with commercial products emerging by 1992. By that time, Intel will probably have incorporated MPEG compression standards into its line of DVI chips.

Still pictures can also be compressed. However, because people can more easily detect glitches in still frames than in frames displayed in sequence at 30 frames per second, lossless compression is more commonly applied to still images.

Other techniques such as the CCITT Group 3 or 4 Fax formats provide good compression of black and white images. Run length encoding is also good when there is a large number of unvarying lines (all black or white). Both methods can be combined to give approximately a 5 to 1 compression ratio on black and white still images.
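
Run-length encoding is simple enough to show in full. The following is a minimal sketch for a single row of a black-and-white image, with 0 for a white pixel and 1 for a black pixel (that pixel convention and the function names are assumptions made for this example):

```python
from itertools import groupby


def rle_encode(row: list[int]) -> list[tuple[int, int]]:
    """Collapse each run of identical pixels into a (value, length) pair."""
    return [(value, len(list(run))) for value, run in groupby(row)]


def rle_decode(pairs: list[tuple[int, int]]) -> list[int]:
    """Expand (value, length) pairs back into the original row."""
    return [value for value, length in pairs for _ in range(length)]


row = [0] * 20 + [1] * 3 + [0] * 9       # 32 pixels, mostly white
encoded = rle_encode(row)

print(encoded)                     # [(0, 20), (1, 3), (0, 9)]
print(rle_decode(encoded) == row)  # True
```

The 32 pixels collapse into three pairs here; a row that alternates black and white at every pixel would gain nothing, which is why the technique suits images with long unvarying runs.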

Table 5.1

| | Motion | Stills |
|---|---|---|
| CD-I | Up to 72 minutes on CD-ROM with partial screen, partial motion, 120-by-80 pixel resolution (this requires 5 to 10 KB per screen). | 8000 still images at a maximum of 720 by 480 pixel resolution. (108 KB to 300 KB for 1 full screen). |
| DVI | Up to 144 minutes partial screen full motion, 256-by-240 pixel resolution video per CD-ROM. Up to 72 minutes full-screen, full-motion, 256-by-240 pixel resolution video per CD-ROM. Up to 16 hours 1/8 screen 1/2 frame rate 256-by-240 pixel resolution video per CD-ROM. | 5000 stills recorded at 1024 by 512 pixel resolution. 7000-10000 still images at high resolution. 3500 still images with 20 seconds of audio per still. 40,000 medium resolution still images. |

Sound Compression

As in video, hypermedia authors can trade audio quality for disk real estate. Everything from CD-audio quality to AM radio quality (without the static noise) sound can be achieved by tweaking sound compression algorithms and compromising on quality to save on storage.

A CD-ROM’s 150 kilobyte-per-second data channel can be used partly for transferring the audio and partly for transferring other data, such as accompanying images. Alternatively, several sound tracks in different languages can be recorded for a particular image or portion of a program.

Introduction to the Design of Hypermedia Applications

[T]oday’s systems for presenting pictures, texts, and whatnot can bring you different things automatically depending on what you do. Selection of this type is generally called branching. (I have suggested the generic term hypermedia for presentational media which perform in this (and other) multidimensional ways.) A number of branching media exist or are possible:

- Branching movies or hyperfilms…

- Branching texts or hypertexts…

- Branching audio, music, etc.

- Branching slide shows.

[Nelson, 1975, p. DM44]

Table 5.2 CD-I and CD-ROM XA Audio

| Type | Storage requirements | Notes |
|---|---|---|
| Level A Stereo | 1/2 hi-fi audio | (only for CD-I not CD-ROM XA) |
| Level B Stereo | 1/4 | |
| Level C Stereo | 1/8 | |
| Level C Mono | 1/16 | Up to 16 hours per CD-ROM. Good for speech. 1 second – 10KB |

The first efforts at applying a new technology are often modeled on strategies used in old technologies. Not surprisingly, many hypermedia applications use the computer as an expensive emulation of paper. Don’t assume, however, that the primary focus of a hypermedia application has to be text – like a book with built-in movies. Many hypermedia applications have made successful use of video or audio as the primary medium and use text only to supplement the experience (e.g., with help screens, subtitles, and footnotes).

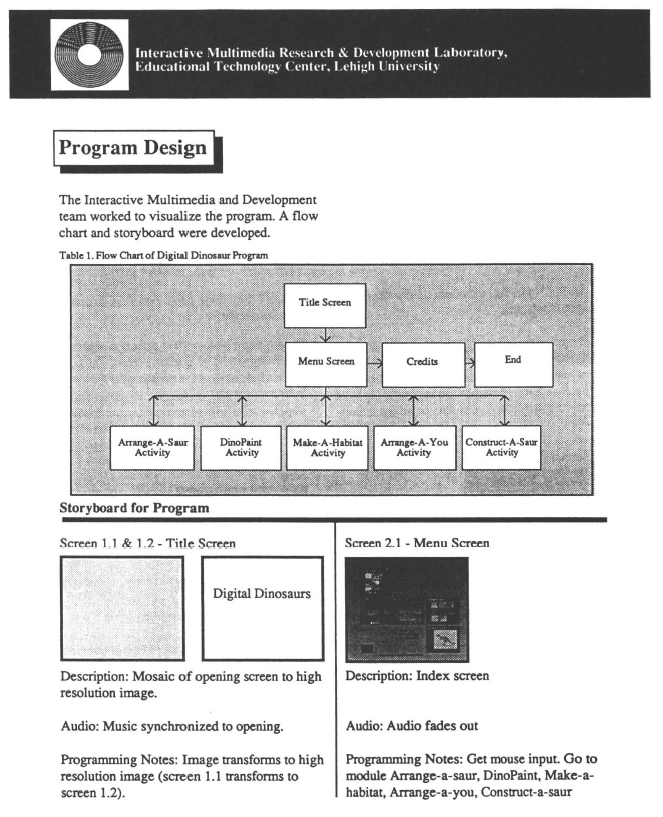

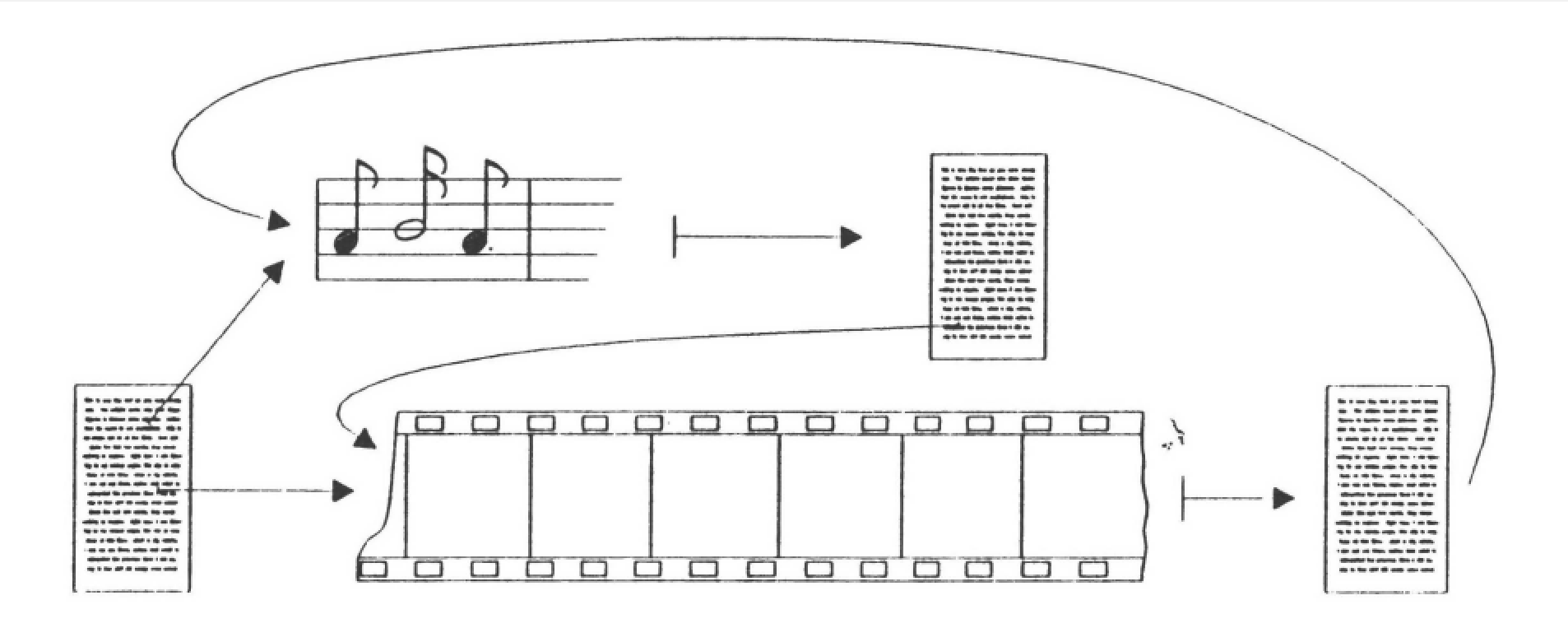

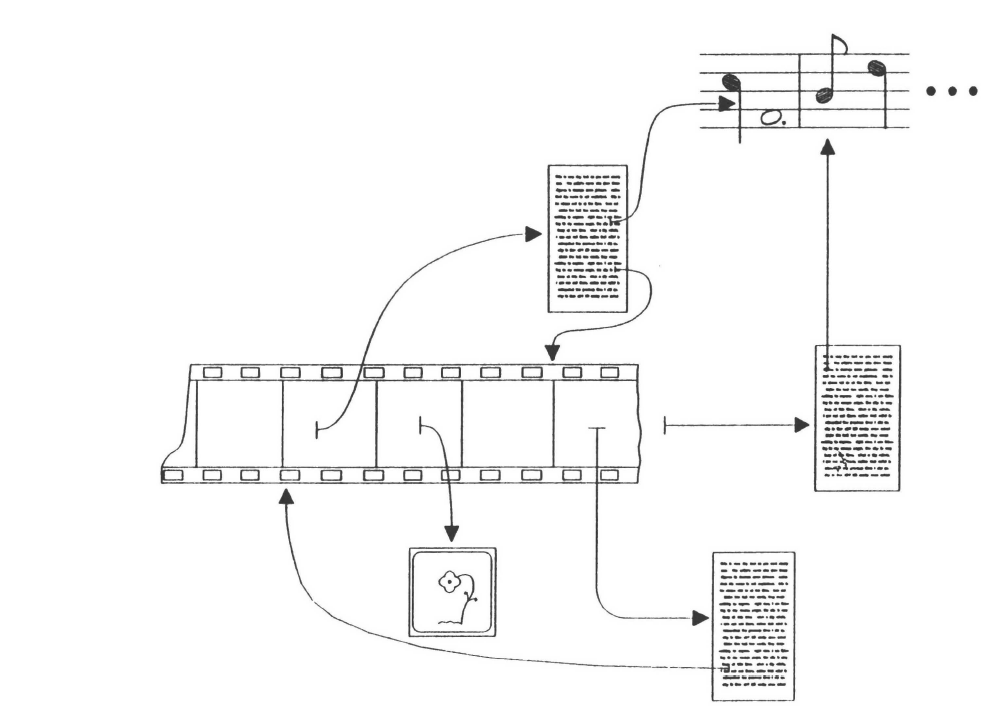

The creation of a hypermedia system starts when choices are made about how to treat the material. The first step is to study the target audience and determine its level of sophistication. Next comes the creation of a storyboard (a general outline which defines the possible sequences the hypertext will provide) or a map of all the potential nodes of the material and how they are interrelated. Since nodes are likely to be linked in a nonhierarchical fashion, the map should include textual or graphical depictions of what nodes will be reached by what links. For a simple web where nodes follow each other in a nearly sequential pattern, the map may not be very complex. Other webs may require more complex maps.

Consider, for example, a hypermedia application that describes how our muscles work. The opening screen of the work might start with a video clip of a muscle in operation with a voice-over describing the processes involved. Various links might be provided allowing viewers to stop the video to call up textual descriptions of the terms used in the voice-over. From these descriptions the viewer can be provided with a choice of returning to the video where they left off or calling up another video sequence that demonstrates other related processes.
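
A map of nodes and links like the one described above can be kept as a simple data structure while the storyboard is being developed. The sketch below models the muscle example with three hypothetical nodes (the node names, the `medium` field, and the helper functions are all invented for illustration) and checks that every node can be reached from the opening screen.

```python
from collections import deque

# Hypothetical node map for the muscle example: each node records its
# medium and the nodes its links lead to.
NODES = {
    "muscle_video":    {"medium": "video", "links": ["term_definition"]},
    "term_definition": {"medium": "text",
                        "links": ["muscle_video", "related_video"]},
    "related_video":   {"medium": "video", "links": ["muscle_video"]},
}


def reachable(nodes: dict, start: str) -> set[str]:
    """Return every node that can be reached from start by following links."""
    seen = {start}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for target in nodes[current]["links"]:
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return seen


def orphans(nodes: dict, start: str) -> set[str]:
    """Nodes that no path from the opening screen ever reaches."""
    return set(nodes) - reachable(nodes, start)


print(sorted(reachable(NODES, "muscle_video")))
# ['muscle_video', 'related_video', 'term_definition']
print(orphans(NODES, "muscle_video"))  # set()
```

A check of this kind is one way to catch nodes that were planned on the storyboard but never linked into the web.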

In the remainder of this chapter, we will highlight some of the many issues hypermedia authors must address in designing and implementing applications.

The Unique Challenges of Producing Hypermedia Applications

Put sound, video, text and stills together and you have multimedia. Toss in some hypertext links and you have hypermedia. Sounds easy, doesn’t it? But the authoring challenges of hypertext pale in comparison to those that face the hypermedia author. The temporal nature of video and audio presents the hypermedia author with a unique set of editing and production problems, including:

- Facilitating Navigation in Hypermedia

- Controlling the Pace of a Hypermedia Application

- Linking To and From a Moving Image or a Changing Tone

- Empowering Hypermedia Viewers

- Production Quality

- Synchronization of Media

Facilitating Navigation In Hypermedia

Readers can get lost in hypermedia space at least as quickly as they can in hyper(text) space. In fact, it is often much easier to get lost in a hypermedia application because conventions for navigating video, pictures and sound are necessarily more complex than navigating text alone. Among the toughest problems hypermedia authors face is providing readers with useful and complete maps of the various pathways that can be followed through the multimedia hyperbase.

In hypermedia, as in hypertext, making the structure of the hyperbase explicit is one powerful aid to navigation [see Parunak, "Ordering the Information Graph" (Chapter 20), in this book]. Other suggestions for authors seeking to minimize viewers’ disorientation as they browse through hyperdocuments are provided in the chapters by Gay & Mazur and Bernstein.

Pacing

Granularity

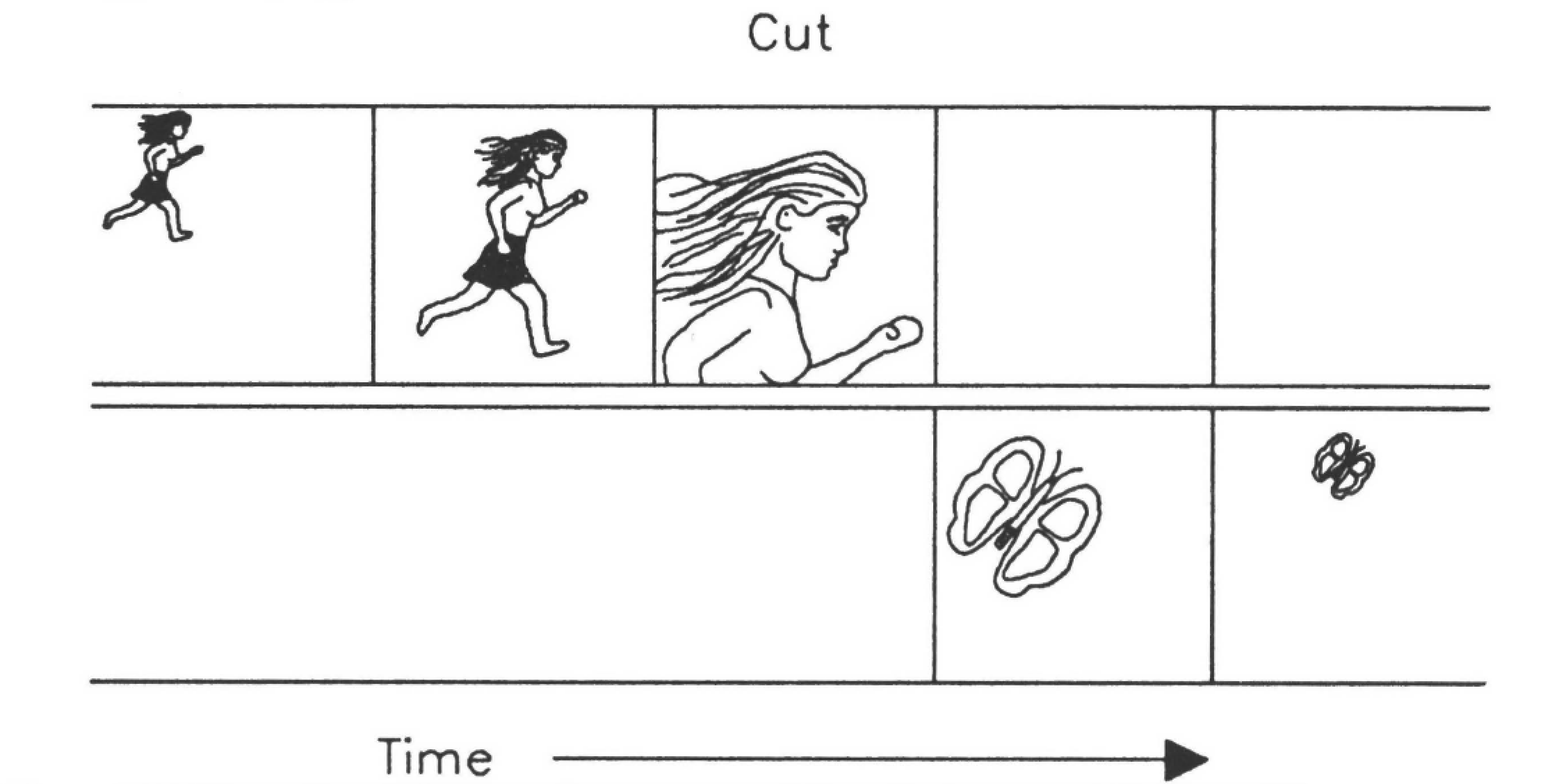

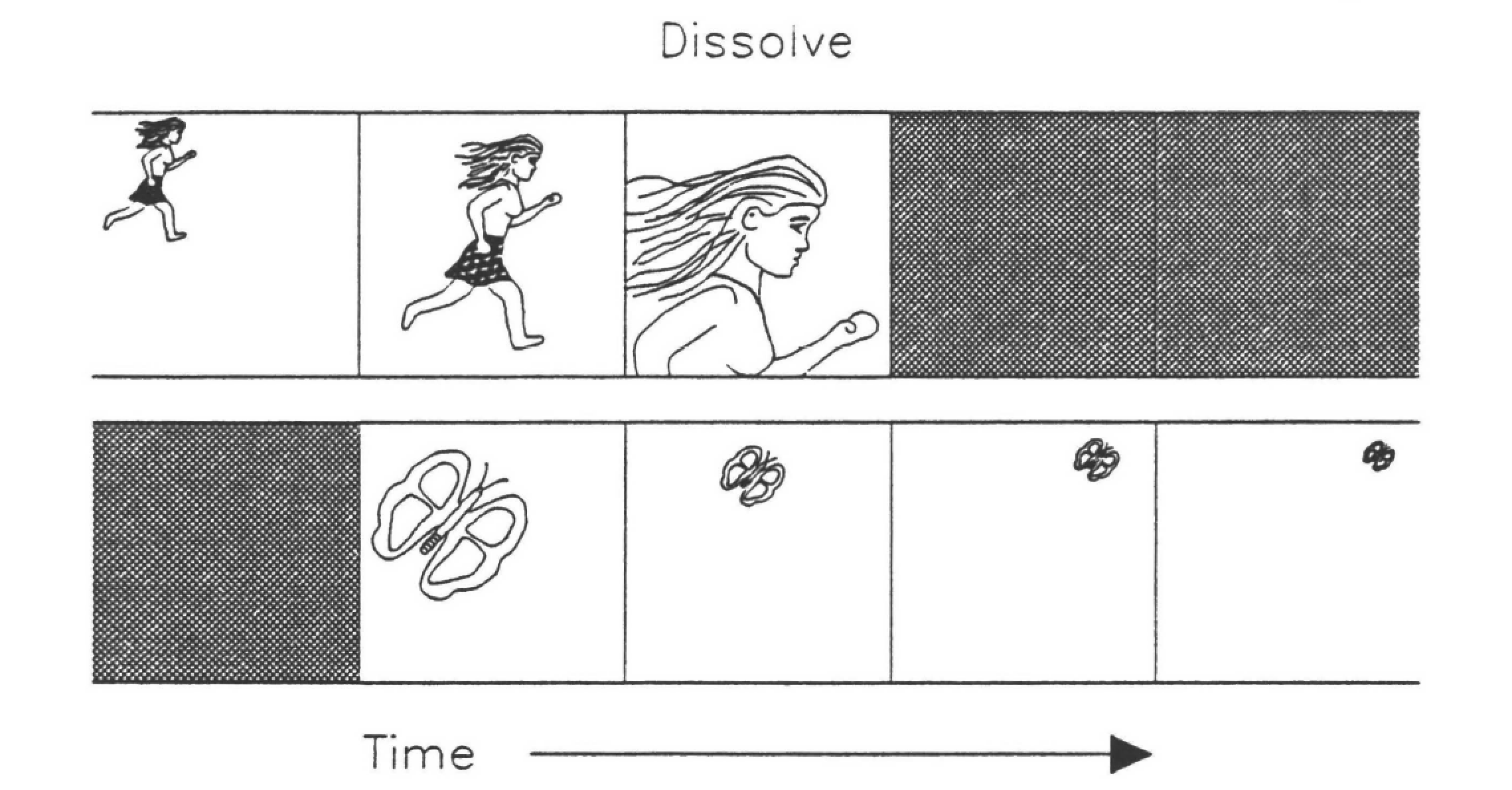

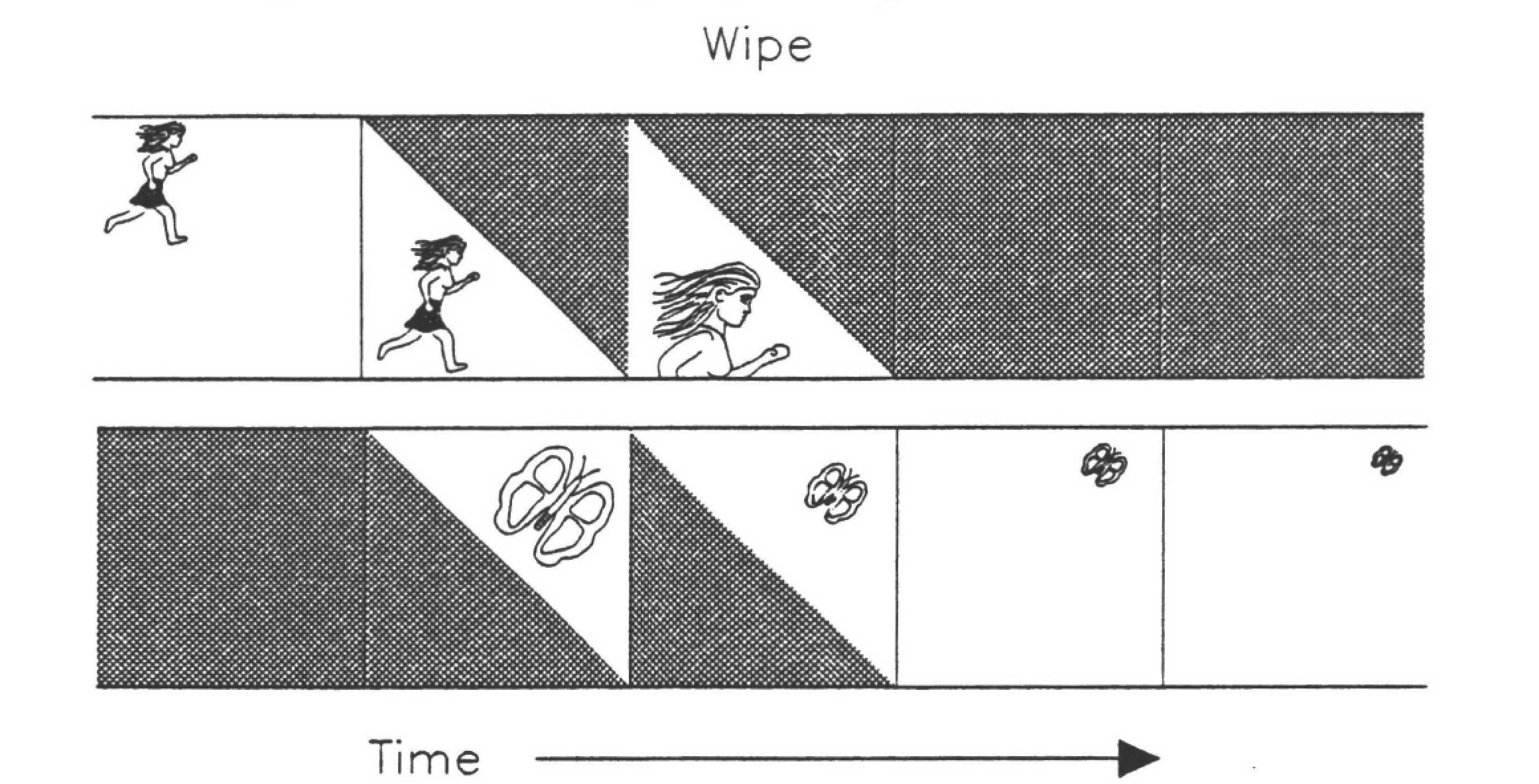

A movie director controls the pace of a film by determining the length of the sequences within it and by using different transitional devices (such as fades, wipes and cuts) between those sequences. A series of short, choppy sequences, like the editing in the old Laugh-In television show, moves the story along in a sprightly, upbeat fashion. Longer, slower sequences can be more thoughtful.

Transitions can be sudden – in a cut, sequences start and end abruptly and without warning. Or, transitions can be more gradual. In a dissolve, frames at the end of the first sequence and the beginning of the second are superimposed, so the viewer has time to think about the transition being made. On the other hand, a dissolve may move the viewer to a new sequence more efficiently than a cut. In a wipe, some portions of the screen display the end of the first sequence, while other portions display the beginning of the next sequence for a while [Philips, 1988].

Say a character in a movie is seen getting into his Maserati to return from his office to his palatial mansion. Rather than shoot footage of the drive between these two places, the director might film: the character getting into his car, cut to the character driving away, then cut to the driveway of the mansion where the footman is standing ready to help the hero park his car. Or, the director might speed the pace by dissolving on a shot of the character getting into his car, then fading back in on our hero purposefully entering his front door.

In a hypermedia production, the author has less control over pacing – the reader may try to insist on watching the road as the character drives home. However, limitations imposed by the capacity and resolution of the storage medium and by the author for artistic, economic, or didactic reasons will constrain the quantity, quality, and volume of sound, video, and graphics in any hypermedia application. Therefore, the author must decide up front which 15-second "sound bites" are sufficient to convey certain information, and whether other sequences require two or three minutes. The author will determine, by providing links, where more specific information is available and what form that information takes.

Like a movie director, a hypermedia designer should plan each sequence in the application. Many hypermedia designers use storyboards similar to the ones used by other multimedia professionals from movie directors to software designers.

In the storyboard, the author might impose a hierarchy of larger segments, composed of smaller segments, so that readers can choose which level is appropriate. Or, the designer might decide to build in pause, stop, fast-forward and back-up links to make it easier for readers to control the flow of the video and/or audio.

Flow

Related to granularity is the issue of flow, especially between different segments of video. Professional video and film editors take great pains to ensure that jumps between shots are as smooth as possible. A poor transition can be disorienting for a viewer. In a hypermedia application, viewers are in theory going to be jumping in and out of different video and audio segments at will. The application developer must manage these transitions carefully, maintaining a feeling of continuity while not limiting readers’ flexibility. Since a reader might "cut" between scenes in a totally unpredictable order, it might be hard for an author to decide how to implement transitions between nodes. A hypermedia author can specify which transitional device is to be invoked when a viewer selects a particular link. HyperCard HyperTalk programmers, for example, can include visual effect commands that cause a card’s appearance on screen to be accompanied by a special effect such as a dissolve or a wipe.

Linking

How does the hypermedia author set up a coherent set of links that allows readers to get to the massive amount of information stored in the moving video images (e.g., the setting, the objects within the image, the context of the action)? This is a challenge even in simple hypertexts; the fact that nodes cannot all be assumed to be text-only makes hypermedia linking more difficult. Linking into and out of moving video and changing audio sequences is particularly tricky.

Where and How To Leap Into the Video and Audio Flow

One of the problems of allowing hypermedia viewers to link into a moving stream of pictures or sounds is that you must take the element of time into consideration when placing the link. In some instances, linkages wed to particular moments in the stream make sense (for example, you will almost always want to provide a link to the beginning of a famous speech). At other times, you might want to allow viewers to enter the video or sound stream at any frame they wish. In many cases, once a sound or video node has been accessed by the viewer, it makes sense to allow the video or audio stream to play sequentially from beginning to end, but to allow the viewer enough control to cut the sequence short at any time.

If you do allow viewers to jump into a video sequence in the middle or to control the speed of video display, difficulties will occur if the compression scheme used to save storage space is a base/delta scheme. Skipping ahead in the sequence may not be possible or may result in an unacceptable time delay. Some compression schemes (such as DVI) allow authors to insert absolute frames at specified points in the video stream. You must plan these and program them in. You could, for example, provide entry into the video stream at half-second intervals by inserting a base frame every fifteenth frame. Of course, inserting all these entry nodes incurs costs. For example, with DVI each absolute image node requires roughly three times as much data as a frame accessed sequentially.
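The trade-off between entry points and storage can be worked through with a little arithmetic. The sketch below assumes 30 frames per second (implied by a base frame every fifteenth frame giving half-second intervals) and takes the rough three-to-one cost of a base frame from the DVI figure above. The function names are ours, and sizes are expressed in units of one sequentially accessed frame rather than in bytes.

```python
from typing import List


def entry_points(total_frames: int, base_interval: int) -> List[int]:
    """Frame numbers (0-based) at which a viewer can enter the stream."""
    return list(range(0, total_frames, base_interval))


def relative_size(total_frames: int, base_interval: int,
                  base_cost: float = 3.0) -> float:
    """Stream size in units of one delta frame (base_cost is an assumption)."""
    bases = len(entry_points(total_frames, base_interval))
    deltas = total_frames - bases
    return bases * base_cost + deltas


def frames_to_decode(target: int, base_interval: int) -> int:
    """Frames decoded to show `target`: the nearest earlier base frame
    plus every delta frame between that base and the target."""
    return target % base_interval + 1


clip = 10 * 30  # a ten-second clip at the assumed 30 frames per second
sparse = relative_size(clip, base_interval=clip)  # one base frame at the start
dense = relative_size(clip, base_interval=15)     # an entry point every half second
print(f"entry points: {len(entry_points(clip, 15))}")
print(f"storage growth: {dense / sparse - 1:.1%}")
print(f"frames decoded to reach the last frame: "
      f"{frames_to_decode(clip - 1, 15)} versus {frames_to_decode(clip - 1, clip)}")
```

Under these assumptions, half-second entry points add a modest amount of data while sharply reducing the number of frames that must be decoded before a jump can be displayed.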

Another problem is that each time you search for the beginning of a new video sequence, most storage devices will take a while to find and/or decompress it. For example, many videodisk players take three seconds to call a videodisk sequence to the screen. Obviously, with delays of this magnitude, the number of discrete calls to video should be kept to a minimum.

Authors can link between dynamic objects such as audio or video nodes in several ways:

- Each sequence can be treated as a discrete node. The links from a sequence would only occur at the end of the sequence. In this case, once a video or audio node is selected, the entire sequence would be played, and the user would have no chance to interrupt. This is acceptable when the audio and video sequences are small, or when there are certain nodes that the author wants played all the way through every time they are accessed. Consider a worst-case scenario in which a user links from a biography of Beethoven to a node which plays a non-interruptible version of his Fifth Symphony. More than 30 minutes would elapse from the time the listener selects the Fifth Symphony until user control returned. In general, readers will expect at least the opportunity to stop a video or audio node (see Empowering Hypermedia Viewers).

- An alternative scheme is for the developer to treat each video or audio sequence as a set of nodes. This design methodology provides more opportunities for users to make choices within each video sequence.

Each smaller node might itself refer to a sequence of other nodes, and would contain appropriate links to these other nodes. If the viewer lets a sequence play all the way through, the author will have specified the node to end on: this could be a freeze on the last frame of the completed sequence, or it could be a different node entirely.
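The second scheme can be sketched as a chain of small nodes, each with its own links and a pointer to the node that follows it. The code below is an illustrative sketch only: `SegmentNode`, `split_sequence`, the node names, and the timings are all invented for the example and do not reflect real movement lengths or any particular authoring system.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class SegmentNode:
    name: str
    start_s: float
    end_s: float
    links: List[str] = field(default_factory=list)  # targets offered while playing
    next_node: Optional[str] = None                 # where playback continues


def split_sequence(name: str, boundaries: List[float],
                   end_node: str) -> Dict[str, SegmentNode]:
    """Turn one long sequence into a chain of smaller nodes.

    `boundaries` lists segment start and end times in ascending order;
    the last segment hands off to `end_node`, the node the author chose
    for viewers who let the whole sequence play through.
    """
    nodes: Dict[str, SegmentNode] = {}
    count = len(boundaries) - 1
    for i in range(count):
        node_name = f"{name}_{i + 1}"
        follower = f"{name}_{i + 2}" if i + 1 < count else end_node
        nodes[node_name] = SegmentNode(node_name, boundaries[i],
                                       boundaries[i + 1], next_node=follower)
    return nodes


# Made-up timings in seconds, for illustration only.
nodes = split_sequence("fifth", [0, 450, 900, 1200, 1900], end_node="beethoven_bio")
nodes["fifth_1"].links.append("fate_motif_note")
for node in nodes.values():
    print(node.name, node.start_s, node.end_s, "->", node.next_node)
```

Because each segment is its own node, a viewer can leave at any segment boundary or through any link a segment offers, instead of waiting for the whole sequence to finish.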

Making Links Explicit.

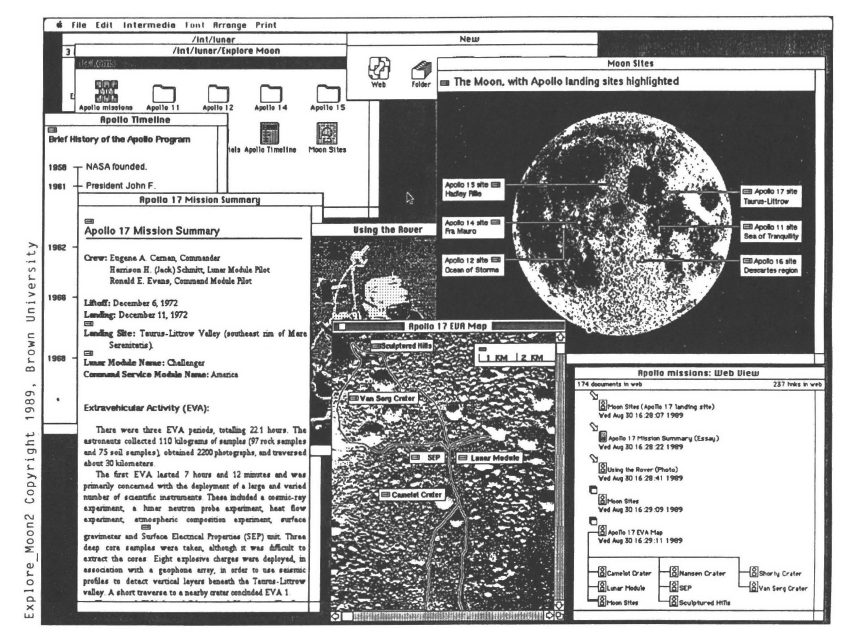

In stills, such as the pictures of the moon’s surface in Figure 5.15, it may be very effective to overlay text over the graphic to serve as link anchors.

However, alerting viewers to the presence of a link from within a video is more problematic. Warning viewers ahead of time that a link anchor leads into a multimedia link is a very good idea but not always easy to accomplish. In Elastic Charles, a project at the MIT Media Lab, each link to a video clip is anchored by a miniaturized version of the clip on the screen in a sort of moving icon (or micon). This approach works well in applications where the contents of a video sequence are so distinctive that users can recognize them by previewing just a clip [Nielsen, 1989].

Audio nodes present another problem. It is easy to have an audio node as the destination of a hypertext link, but more difficult to anchor links from within the sound itself. The listener cannot click on the musical notes that come out of a speaker, so the developer must provide a visual representation of the sound information that provides appropriate opportunities for linking.

Links To and From Still Pictures

You should also adopt a consistent mechanism to let viewers know where links are on each still picture. Marking active areas on the screen can be achieved in any number of ways. For example, active areas (link anchors) might be highlighted by coloring them differently than the background, by outlining them, or by using a three-dimensional effect that displays the image of the link anchor when the cursor is moved over an active spot on the screen.

Table 5.3 Different constraints govern the use of different media in hypermedia.

| | Text | Video | Audio |
|---|---|---|---|
| Content | Letters, words, sentences, paragraphs | Frames, segments | Intangible changes in pitch and volume |
| Organization | Static, application-dependent | Temporal (event) or spatial (place) | Temporal (event) |
| Meaning | In words and their context | In still image or in motion (context) | In change over time (context) |
| File Size | ~1 byte for each letter | 22MB/second | 10KB to 75KB/second |
| Potential Anchors | Keywords, buttons, highlighted text | Keywords, static and dynamic buttons, micons (moving icons), regions within image | Keywords, static and dynamic buttons, visual surrogates for sound |

To see how such choices can affect a design, consider an on-screen picture of an airplane used as a reference in an electronic aviation repair manual. An obvious design is one which specifies that the viewer can click on any part of the airplane to receive more information.

Given a high enough screen resolution, it would be technically possible to show hundreds of parts on screen and provide a link for each. Such an approach would tax the understanding and manual dexterity of anyone using the manual. A better design might be to divide the aircraft into seven areas (wheels, cockpit, passenger area, wings, engine, ailerons, and tail fin). Click on one of these areas and the picture zooms in on a close-up divided into another half dozen areas or so. Each zoom focuses in on a smaller and smaller list of items until the particular widget of interest is found.
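The zooming design amounts to a tree of clickable regions in which each view exposes only its immediate children. Here is a minimal sketch of that idea; the `Region` class, the `hit` function, the rectangle coordinates, and the part names are all hypothetical, and each child's rectangle is assumed to be expressed in the coordinates of the zoomed view in which it appears.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Rect = Tuple[int, int, int, int]  # left, top, right, bottom in screen pixels


@dataclass
class Region:
    name: str
    rect: Rect
    children: List["Region"] = field(default_factory=list)

    def contains(self, x: int, y: int) -> bool:
        left, top, right, bottom = self.rect
        return left <= x < right and top <= y < bottom


def hit(view: Region, x: int, y: int) -> Optional[Region]:
    """Return the child region of the current view under the click, if any."""
    for child in view.children:
        if child.contains(x, y):
            return child
    return None


airplane = Region("airplane", (0, 0, 640, 480), [
    Region("cockpit", (0, 160, 160, 320)),
    Region("wings", (160, 0, 480, 480), [
        Region("flap", (0, 0, 320, 240)),
        Region("wing tip light", (320, 240, 640, 480)),
    ]),
])

view = airplane
for click in [(200, 100), (400, 300)]:  # two successive clicks by the viewer
    target = hit(view, *click)
    if target is None:
        break                           # click landed outside every active area
    print(f"{view.name} -> {target.name}")
    view = target                       # zoom in and show the next level
```

Keeping only a handful of children at each level is what keeps the active areas large enough to select comfortably.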

Many of the other issues that must be considered in coming up with a good hypermedia design are the same as those considered in designing plainer hyperdocuments. For example, how does the author inform the viewer what the result of selecting a link will be? Will selecting a link take you to another picture, to a definition or description of the target of the link you click on, or to a menu of choices? How does the viewer return to a previously displayed screen or to the initial launching area of the hypermedia? Do you provide different types of anchors to indicate whether links target text passages, pictures, or sounds? Must text menus be scattered around the picture to provide additional guidance to the viewer?

It is easy to debate which devices work best in any given situation. In fact, it is often true that the actual mix of devices chosen is less important than the fact that a consistent approach has been adopted.

Adopting such a consistent approach allows viewers to make assumptions about what the result of each action is likely to be and saves them from stumbling blindly through the maze.

Links to Dynamic Nodes.

Just because a link is attached to one place in one frame of the video doesn’t mean the position of the link can’t change in subsequent frames. The first frames could contain link anchors that appear in different places and link to different nodes than later frames. These anchors should not move too rapidly or the viewer will have a difficult time selecting them.

Anchors may appear or disappear over time, depending on the contents of the video clip. An interview with Jean-Paul Sartre, for example, might make mention of his relationship with Simone de Beauvoir. A button would appear that would allow a reader to zoom off to get more detail on this relationship. The button, however, would disappear as the conversation with Sartre moved on to other topics.
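An anchor that moves, appears, and disappears can be described by a time window plus a start and end position. The sketch below assumes straight-line movement between the two positions, which is our simplification; the class and function names, the target name, and the timings are invented for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Point = Tuple[float, float]


@dataclass
class TimedAnchor:
    target: str
    start_s: float    # moment the anchor appears
    end_s: float      # moment the anchor disappears
    start_pos: Point  # screen position when it appears
    end_pos: Point    # screen position just before it disappears

    def position_at(self, t: float) -> Optional[Point]:
        """Anchor position at time t, or None when it is not on screen."""
        if not (self.start_s <= t < self.end_s):
            return None
        f = (t - self.start_s) / (self.end_s - self.start_s)
        x = self.start_pos[0] + f * (self.end_pos[0] - self.start_pos[0])
        y = self.start_pos[1] + f * (self.end_pos[1] - self.start_pos[1])
        return (x, y)


def active_anchors(anchors: List[TimedAnchor], t: float) -> List[TimedAnchor]:
    """Anchors the viewer could select at time t."""
    return [a for a in anchors if a.position_at(t) is not None]


# Hypothetical button shown while the interview touches on one topic.
button = TimedAnchor("de_beauvoir_bio", 40.0, 60.0, (100.0, 300.0), (200.0, 300.0))
for t in (30.0, 50.0, 70.0):
    print(t, button.position_at(t))
```

The same window data could be used to check how fast an anchor travels, so that the author can slow down any anchor that would be hard to select.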

A link’s target may also change based on the viewer’s responses. For example, when a viewer has explored all the nodes that emanate from a particular link, then the system might change the target of that link, as a mechanism to keep users from repetitively visiting the same nodes. Depending on how well this effect is integrated into the aspects of the application, it may either confuse viewers or promote greater efficiency in finding desired information by eliminating inappropriate or duplicate nodes.
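A link whose target changes once its nodes have been explored can be sketched as follows. This is only one possible reading of the idea: the `AdaptiveLink` class, its fields, and the node names are hypothetical, and it assumes the system keeps a set of the nodes each viewer has visited.

```python
from dataclasses import dataclass
from typing import FrozenSet, Set


@dataclass(frozen=True)
class AdaptiveLink:
    primary: str               # usual target of the link
    reachable: FrozenSet[str]  # nodes that emanate from this link
    fallback: str              # target used once those nodes are exhausted

    def resolve(self, visited: Set[str]) -> str:
        """Pick the target based on what this viewer has already seen."""
        if self.reachable <= visited:  # every emanating node has been explored
            return self.fallback
        return self.primary


link = AdaptiveLink(
    primary="sartre_overview",
    reachable=frozenset({"sartre_overview", "sartre_plays"}),
    fallback="sartre_further_reading",
)
print(link.resolve({"sartre_overview"}))
print(link.resolve({"sartre_overview", "sartre_plays", "home"}))
```

Whether the change helps or confuses depends on how it is signaled; an anchor that silently leads somewhere new may surprise a viewer who expected the old destination.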

An alternative is to place links from video outside the video frame. This is a particularly attractive option when video has been compressed using DVI or CD-I schemes, since the results are often better if full screen video is not required. Another advantage of this approach is that link anchors need not move around on the screen. Although moving anchors may give viewers a better idea of the target they are selecting, they may also add to the busyness of the screen.

Empowering Hypermedia Viewers

The reader of a book has control over the speed and order in which material is presented. Using a hypermedia document should be a similar experience. For example, viewers should be able to skim or browse a hypermedia document as they would a document on paper and they should have the means to predict where in the hypermedia particular information is stored.

Granting hypermedia viewers control over the order of presentation is easy; this is hypertext, after all. Speed of presentation is harder to guarantee because it relies on the ability of the delivery platform to respond promptly to user input. For example, the 3-second access time of a videodisk player means that some delay in response may be unavoidable. Developers should strive to keep video segments well-organized and to a manageable size so that viewers can skip around easily if desired and yet still understand the material.

At the most basic level, viewers must be able to escape at any time. A hypermedia application should rarely, if ever, force a viewer to watch a two-minute video sequence to its bitter end. The viewer should at least have options similar to those on tape recorders and VCRs, including the ability to rewind the sequence to the beginning and replay it, preview it in fast forward, pause in the middle of the sequence, or stop it altogether and go on.
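The VCR-style controls listed above map naturally onto a small state machine. The following is a minimal sketch under our own assumptions: `ClipPlayer` and its method names are hypothetical, time is advanced by calling `tick`, and fast-forward preview is modeled simply as playing at a higher rate.

```python
class ClipPlayer:
    """Tracks playback of one video or audio sequence."""

    def __init__(self, length_s: float) -> None:
        self.length_s = length_s
        self.position_s = 0.0
        self.state = "stopped"  # one of "stopped", "playing", "paused"
        self.rate = 1.0         # 1.0 is normal speed; higher previews faster

    def play(self, rate: float = 1.0) -> None:
        self.state = "playing"
        self.rate = rate

    def pause(self) -> None:
        if self.state == "playing":
            self.state = "paused"

    def rewind(self) -> None:
        self.position_s = 0.0

    def stop(self) -> None:
        # The viewer escapes; the application is free to move to another node.
        self.state = "stopped"

    def tick(self, elapsed_s: float) -> None:
        """Advance playback by elapsed wall-clock time, if playing."""
        if self.state != "playing":
            return
        self.position_s = min(self.length_s,
                              self.position_s + elapsed_s * self.rate)
        if self.position_s >= self.length_s:
            self.state = "stopped"


player = ClipPlayer(length_s=120.0)
player.play()
player.tick(30.0)
player.pause()
print(player.state, player.position_s)
player.rewind()
player.play(rate=4.0)  # preview in fast forward
player.tick(10.0)
player.stop()          # escape before the end
print(player.state, player.position_s)
```

The important property is that `stop` is available in every state, so the viewer is never forced to sit through a sequence.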

Production Quality

The content of television may not always be the best, but the shows (and especially the commercials) are slick. Users of hypermedia applications will have little patience for poorly produced video and audio. How can designers of hypermedia applications compete with the production standards of commercial television?

Television and movie production values may differ, but at least the resolution of the screens and the number of frames of video or film required are fixed. Designers of hypermedia applications have it much harder – they must make difficult trade-offs between sound and video quality and capacity. As we have seen, many of these choices have to do with the compression techniques the author is working with, and with the capacity and power of the target delivery system.

Synchronization

Video and audio must be in perfect synchronization. If the application involves text or animated graphics, in addition to video and sound, the challenge of synchronizing all the elements of the application becomes even greater. The Athena Muse application at the MIT Media Lab, for example, matches video interviews of native French speakers with one of two levels of subtitles: full text for beginning French students and keywords for more advanced students. Creating point-to-point cross-references between the video and the subtitles, all with reasonable display performance at normal conversational speeds, was not a trivial task [Hodges, 1990].
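To make the cross-referencing problem concrete, here is a small sketch of two-level subtitles keyed to playback time. It is not a description of how Athena Muse works; the `Cue` and `SubtitleTrack` names, the level labels, and the sample French lines are all invented, and the sketch assumes cues do not overlap.

```python
import bisect
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Cue:
    start_s: float
    end_s: float
    full_text: str  # shown to beginning students
    keywords: str   # shown to more advanced students


class SubtitleTrack:
    def __init__(self, cues: List[Cue]) -> None:
        self.cues = sorted(cues, key=lambda c: c.start_s)
        self._starts = [c.start_s for c in self.cues]

    def cue_at(self, t: float) -> Optional[Cue]:
        """Find the cue covering time t with a binary search on start times."""
        i = bisect.bisect_right(self._starts, t) - 1
        if i >= 0 and t < self.cues[i].end_s:
            return self.cues[i]
        return None

    def subtitle_at(self, t: float, level: str) -> str:
        cue = self.cue_at(t)
        if cue is None:
            return ""  # nobody is speaking at this moment
        return cue.full_text if level == "beginner" else cue.keywords


track = SubtitleTrack([
    Cue(0.0, 2.5, "Bonjour, je m'appelle Claire.", "bonjour, s'appeler"),
    Cue(3.0, 6.0, "J'habite ici depuis dix ans.", "habiter, depuis"),
])
print(track.subtitle_at(1.0, "beginner"))
print(track.subtitle_at(4.0, "advanced"))
```

The binary search keeps each lookup cheap, which matters when the subtitle must be refreshed many times a second to stay in step with speech.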

Production and Authoring Requirements

Costs

A book publisher needs to make a tremendous investment in word processing, typesetting, printing, and bindery equipment just to produce books; a book reader needs only the book itself. The same distinction holds for hypermedia – the production system and the delivery system will differ.

Producing a hypermedia application may require video production and editing, audio production and editing, graphics and animation production, digitization, possibly compression, programming (or at least authoring), mastering, and so on. Not all these functions need be performed in-house by the hypermedia producer, but they must all be done and they all cost money [Berk and Devlin, 1990]. None of these capabilities should be required of most hypermedia viewers.

Production Costs.

The cost of any multimedia production is inevitably higher than the cost of producing text. Producing motion video is the most expensive, sound comes next, still graphics are less expensive, and text is usually the easiest to manage. You can cut down on the cost of multimedia by using an approach suggested by Tom Corddry of Microsoft: keep moving video to a minimum and instead use sequences of stills, as in a slide show, perhaps with audio voice-over. Not only does this cut the cost of production, but it also increases the amount of information that can be stored.

The cost of producing hypermedia applications can be high; they can run from the thousands to the millions of dollars. For example, a simple hypermedia application with about forty nodes, half of them video sequences, and forty links will cost about $45,000. This cost includes scripting and producing. Richard Michaels, of Learncom, a software training company, estimates that it will cost between $200,000 and $450,000 to implement an interactive training system without video sequences. Full-scale video-audio hypermedia productions can cost much more.

Tools

Tools for preparing hypermedia fall into a number of different categories. There are tools for editing the raw video and audio, callable routines for presenting various forms of multimedia, and authoring systems which incorporate the linking tools.

The spectrum of editing systems runs from painting programs which draw on stills, to graphics animators, to programs that identify and re-sequence graphical, video and/or audio sequences, to special effects generators and beyond.

Authoring tools and courseware generators may provide a flowchart-like interface and the capability to play different audio and video sequences based on certain conditions. These software products may help even those with minimal programming skills to define the flow of a hypermedia application. [See Berk and Devlin, 1990, for a more extensive discussion of the equipment necessary to produce multimedia segments for use in hypermedia.]

Cost of a Delivery System.

A hypermedia delivery system might range anywhere from a street model personal computer or Macintosh (which costs under $3000) to a computer network system with six videodisks and a video jukebox and specialized equipment for voice or music synthesis. Current designs of CD-I players predict a stand-alone configuration that will sell for about $2000.

Viewers of hypermedia applications may also need the following peripherals: a CD-ROM drive ($600 to $1200), high-resolution color display board ($300 to $2000), monitor for video images ($500 to $3000), analog video disk player ($400 to $1000), voice synthesizer ($300 to $1000), music synthesizer ($300 to $2000), video decompression hardware ($200 to $1000) and audio decompression hardware ($100 to $500).

Graphics, Video and Sound Editing.

A hypermedia authoring system must at least be able to display and link to two- or three-dimensional graphics and to play back sequences of analog or digital audio or video.

Many hypertext authoring systems provide some support for multimedia. Apple’s Macintosh combined with HyperCard 2.0 will provide access to hypertext authoring capabilities from the operating system level.

Authoring Systems.

In order to create a hypertext document, all an author need do, once the raw data (text) is available, is chunk it, edit it, and link it. The editing of a hypermedia document is a bit more complicated, because digital video and audio nodes are not usually available to the author in compressed, digital form until after they’ve been chunked and edited. This is because uncompressed video requires huge amounts of storage, while digitizing and compression form an arduous, expensive process that cannot be undertaken until particular sequences out of the raw video have been chosen for the hypermedia. Nor can video be edited effectively once it has been compressed with most of the available algorithms, because the base/delta schemes they use work only if the frames within a sequence are left unchanged. Editing out just one frame in the middle might make it impossible to rebuild an entire sequence. Authors using analog audio and video have a similar problem: the videodisk can’t be pressed until the audio and video have been edited. OMDR technology obviates this problem somewhat, because it allows authors to copy individual frames to OMDR disks.
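A toy model shows why cutting a frame out of a base/delta stream is troublesome. In the sketch below each "frame" is reduced to a single number standing in for a whole image, which is a drastic simplification of any real compression scheme; `encode` and `decode` are our own illustrative names, and `encode` assumes at least one frame.

```python
from itertools import accumulate
from typing import List


def encode(frames: List[int]) -> List[int]:
    """Store the first frame whole (the base) and every later frame
    as its difference from the frame before it (a delta)."""
    return [frames[0]] + [b - a for a, b in zip(frames, frames[1:])]


def decode(stream: List[int]) -> List[int]:
    """Rebuild each frame by adding the deltas back onto the base in order."""
    return list(accumulate(stream))


frames = [10, 12, 15, 15, 20]
stream = encode(frames)
assert decode(stream) == frames  # an untouched stream rebuilds correctly

# Edit the raw frames first, then compress: the result is what the author wants.
wanted = frames[:2] + frames[3:]

# Cut the same frame out of the compressed stream instead.
damaged = decode(stream[:2] + stream[3:])

print("wanted: ", wanted)
print("damaged:", damaged)
```

Because every delta depends on the frame before it, removing one entry shifts every frame that follows, which is why the cut has to be made before compression or the affected stretch has to be re-encoded.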

Some compression techniques provide an intermediate video form, such as Real Time Video (DVI RTV), which provides a low-resolution, slow-frame-rate approximation of what the video will eventually look like, for use by the multimedia author. The author would determine frame sequencing by using this RTV, then send the video off for final edit, digitization and compression. [Luther, 1989]

Staff.

The production of a hypermedia system requires a wide combination of skills. As overall director, a hypermedia/interactive designer is required to coordinate the individual pieces. Not only are motion picture or video professionals, such as camera persons, light persons, and sound persons required, but so are graphic artists for creating animations and programmers for creating special purpose nodes.

Depending on the sophistication of the production staff, the developer may require the skills of an artist, video editor, audio producer, and other professionals to accomplish these creative tasks.

Conclusion

No technology can meet every need. Hypermedia is a good solution to some but not all problems. In some cases a non-link-based interactive approach is best. The dividing line between an interactive program and a hypermedia one is fine. A distinguishing feature is that an interactive program might have inputs which are not necessarily mouse clickable. For example, a flight simulator would have multiple variable controls, such as the position of the stick and the throttle. These could be implemented using hypermedia links, but the result would probably not be true to life.

In the case studies section at the end of this book, there are some truly lovely examples of effective applications of hypermedia. Also in the case studies see Wayne MacPhail’s discussion of why he chose not to use hypermedia, but instead plain vanilla hypertext, to develop his AIDS and Immigration hyperdocuments. All hypertext design is challenging. Hypermedia requires both hypertext design and multimedia design. Hypermedia implementation takes the skills, time and money that go into hypertext and multimedia combined. Because the storage and performance limitations imposed by hypermultimedia development and delivery platforms are severe, the key to a successful hypermedia is an ability to determine where to compromise on speed or quality.

About the Authors

Oliver L. Picher

Oliver L. Picher is an editor/analyst with Datapro Research Group in Delran, NJ. He was a member of the team that assembled the Datapro Consultant for the Macintosh, a SuperCard-based application, delivered on CD-ROM, that incorporates text, data, Macintosh screen recordings, and audio clips into a complete information service on Macintosh hardware and software.

A writer by training and a technologist by experience, Mr. Picher is awaiting the day when he can use an extensible, object-oriented, hypermedia database to do his research.

Emily Berk

Emily Berk is co-editor of this handbook and a principal in Armadillo Associates, Inc. of Philadelphia, PA. She has been a designer and programmer of interactive hyper- and multimedia since 1980. Some of her recent multimedia meanderings have taken her to work as Project Leader on the Words in the Neighborhood application at RCA Sarnoff Labs and as chief instigator of the Zoo Project, a HyperCard-based mapping program.

Joseph Devlin

Joseph Devlin is the other co-editor of this handbook and the other principal in Armadillo Associates, Inc. He has been a skeptical observer of the multimedia market since the 1970s, when he worked at Commodore International and Creative Computing Magazine.

Ken Pugh

Ken Pugh is President of Information Navigation, Inc., of Durham, NC.

References

Bastiaens, G. A. (1989). "The Development of CD-I." Boston Computer Society, "SIG Meeting Notes," New Media News, Winter.

Berk, E., and Devlin, J. (1990). "An Overview of Multimedia Computing." Datapro Reports on Document Imaging Systems, Datapro Research. Delran, NJ. February.

Brunsman, S., Messerly, J., and Lammers, S. (1988). "Publishers, Multimedia, and Interactivity." Interactive Multimedia. Eds. Ambron, S., and Hooper, K. Microsoft Press, Redmond, WA.

Bush, Vannevar (1945). "As We May Think." The Atlantic Monthly 176.1 (July) pp. 101-103.

Dillon, M. (1988). "Scripting for Interactive Multimedia CD Systems," Communications of the ACM 31.7 (July). (Special issue on hypertext.)

Evenson, S., et al. (1989). "Towards a Design Language for Representing Hypermedia Cues." Proceedings Hypertext '89. November 5-7, 1989, Pittsburgh, PA. New York: ACM, pp. 83-92.

Fox, E. A. (1989). "The coming revolution in interactive digital video." (Special Section in) Communications of the ACM: July.

Fraase, M. (1989). Macintosh Hypermedia: Vols. I-IV, Reference Guide. Scott, Foresman and Company, Glenview, IL.

Frenkel, K. A. (1989). "The next generation of interactive technologies." Communications of the ACM July v32 n7 pp. 872-882.

Hodges, M. E., Sasnett, R. M., Harward, V. J. (1990). "Musings on multimedia." UNIX Review Feb v8 n2 pp. 82-88.

Horn, R. E. (1989). "Mapping Hypertext: The Analysis, Organization, and Display of Knowledge for the Next Generation of On-Line Text and Graphics." The Lexington Institute, Lexington, MA.

Lucky, R. (1989). Silicon Dreams: Information, Man and Machine. St. Martin's Press, NY.

Luther, A. C. (1989). Digital Video in the PC Environment. McGraw-Hill/Intertext Publications, NY.

Meyrowitz, N. (1988). "Issues in Designing a Hypermedia Document System." Interactive Multimedia. Eds. Ambron, S., and Hooper, K. Microsoft Press, Redmond, WA.

Meyrowitz, N. (1990). "The Link to Tomorrow," UNIX Review, vol. 8, no. 2, pp. 58-67.

Miller, D. C. (1986). "Finally It Works: Now It Must 'Play in Peoria'," Lambert, S., and Ropiequet, S. CD ROM: The New Papyrus. Microsoft Press, Redmond, WA.

Nelson, T. H. (1975). "Dream Machines: New Freedoms through Computer Screens: A Minority Report." Computer Lib: You Can and Must Understand Computers. Published by the author.

Nielsen, J. (1989). "Hypertext II: Trip Report." June.

Nielsen, J. (1990). Hypertext and Hypermedia. Academic Press Inc., Copenhagen, Denmark.

Parsloe, E. (1985). Interactive Video. Sigma Technical Press. Cheshire, U.K.

Philips International, Inc. (1988). Compact-Disc Interactive. McGraw-Hill, New York.

Sherman, C. (1988). The CD-ROM Handbook. McGraw-Hill, New York.

Smith, T. L. (1986). "Compressing and Digitizing Images," Lambert, S., and Ropiequet, S. CD ROM: The New Papyrus. Microsoft Press, Redmond, WA.

Stork, C. (1988). "Interleaved Audio: The Next Step for CD-ROM." CD Data Report, Feb., pp. 20-24.

Yankelovich, N., et al. (1988). "Issues in Designing a Hypermedia Document System: The Intermedia Case Study." Interactive Multimedia: Visions of Multimedia for Developers, Educators, & Information Providers. Eds. Ambron, S., and Hooper, K. Microsoft Press, Redmond, WA, pp. 33-85.